If you’re part of an organization running a live streaming service and you’ve faced an unexpected surge in traffic or an outage, your team has probably had to scramble to troubleshoot and fix delays. This interruption can become a significant problem for viewers and a huge challenge for your team.

The root of the issue often lies in your cloud provider’s ability to handle sudden surges in traffic effectively and reroute traffic in the case of an outage. If the cloud provider fails to scale resources quickly enough to accommodate the increased demand, the streaming platform may buckle under pressure, resulting in downtime, laggy streams, or even complete crashes.

By investing in scalable infrastructure, such as distributed servers and cloud-based solutions, and by leveraging edge computing and a powerful content delivery network (CDN), you can ensure that users enjoy uninterrupted live experiences, that content reaches viewers reliably, and that streaming services maintain their reputations in an increasingly competitive market.

In this blog post, we’ll walk through the tools many of our customers use for reliable streaming.

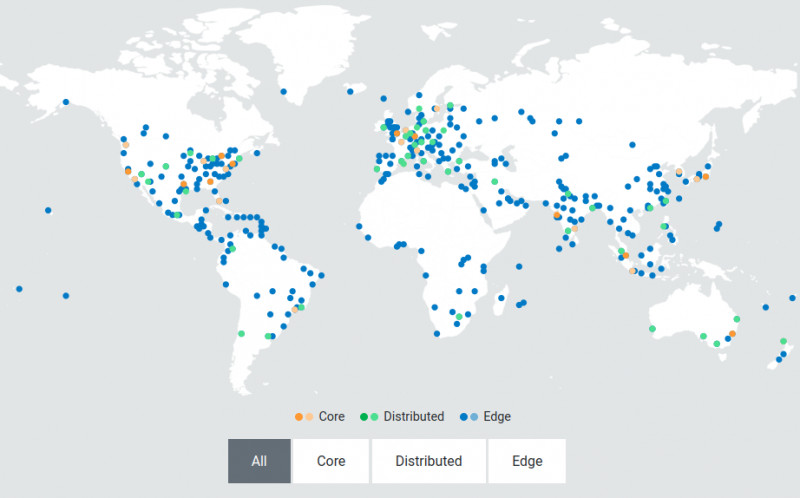

Taking Advantage of Akamai’s Points of Presence

A CDN like Akamai provides decentralized, localized edge servers positioned close to end users. Edge servers are grouped into points of presence (PoPs) that serve as intermediary hubs between the central data center and the user’s device. When you request a video, the content is delivered from the nearest edge server rather than traveling all the way from the central data center. When traffic is high, these servers share the load, delivering content simultaneously to users in different regions without delays.

The image above shows Akamai’s distributed network. Because edge servers sit close to viewers, content takes much less time to reach each device, which lowers latency and makes streaming smoother for end users. The architecture is also designed to scale dynamically with traffic spikes and fluctuations in demand, so the infrastructure stays responsive and resilient even under the most demanding conditions.
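To make the proximity argument concrete, here is a minimal sketch that maps a viewer to the geographically nearest PoP and computes the physical lower bound on round-trip time over fiber (light in fiber covers roughly 200 km per millisecond). The PoP names and coordinates are hypothetical, and production CDNs typically factor in measured network conditions and server load rather than geography alone.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_KM = 6371.0
FIBER_KM_PER_MS = 200.0  # light in optical fiber travels roughly 200 km per millisecond


@dataclass(frozen=True)
class PoP:
    name: str
    lat: float  # latitude in degrees
    lon: float  # longitude in degrees


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points, in kilometres."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(min(1.0, a)))


def min_rtt_ms(distance_km: float) -> float:
    """Lower bound on round-trip time over fiber (ignores routing and queuing)."""
    return 2 * distance_km / FIBER_KM_PER_MS


def nearest_pop(user_lat: float, user_lon: float, pops: list[PoP]) -> PoP:
    """Pick the PoP with the smallest great-circle distance to the viewer."""
    return min(pops, key=lambda p: haversine_km(user_lat, user_lon, p.lat, p.lon))


# Hypothetical PoP list for illustration only; not Akamai's actual footprint.
POPS = [
    PoP("frankfurt", 50.11, 8.68),
    PoP("ashburn", 39.04, -77.49),
    PoP("singapore", 1.35, 103.82),
]

if __name__ == "__main__":
    viewer = (48.86, 2.35)  # Paris
    print(nearest_pop(*viewer, POPS).name)  # → frankfurt
    for p in POPS:
        d = haversine_km(*viewer, p.lat, p.lon)
        print(f"{p.name}: {d:.0f} km, RTT >= {min_rtt_ms(d):.1f} ms")
```

The RTT bound shows why distance matters: a viewer served from another continent pays tens of milliseconds per round trip before any server work happens, and a streaming session makes many such round trips.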

How Akamai helps live streaming services achieve low latency

To deliver a quality experience to your online viewers, you need to ensure that your VMs aren’t adding latency to your live streams. Akamai supports 10-second end-to-end "hand-wave" latency (the delay between something happening in front of the camera and viewers seeing it on screen) for both live linear and live event streams. Here’s how Akamai helps live streaming services achieve low latency:

- Live transcoding in real time: A live transcoding system needs to scale to handle peak video loads in real time. Any latency added during transcoding is passed on to the end user, resulting in a less-than-optimal viewing experience.
- Small segment sizes: Akamai’s architecture was built to reliably handle small segment sizes (down to 2 seconds) for HTTP-based streaming. Short segments let players switch down to a lower bitrate quickly when bandwidth drops, prevent player stalls, and reduce client-side buffers.
- HTTP chunked transfer encoding: Supporting chunked transfers from ingest through to the Edge lets each transfer start as soon as data is available, which minimizes latency.
- Prefetching from the Edge: While the previous segment is being delivered and played, edge servers prefetch the next segments for the same bitrate and cache them locally. The segments are then ready when the player asks for them, which reduces the risk of added latency.
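Segment size matters so much because of how players start a live stream. RFC 8216 (the HLS specification, section 6.3.3) says a client should not start playback at a segment that begins less than three target durations from the end of the playlist, so the player's starting distance from the live edge scales with segment length. The sketch below is a rough latency budget under that rule; the encoding and transport times are illustrative placeholders, not measurements of Akamai's pipeline.

```python
# RFC 8216 (HLS), section 6.3.3: a client SHOULD NOT start playback at a segment
# that begins less than three target durations from the end of a live playlist.
HLS_HOLDBACK_SEGMENTS = 3


def holdback_seconds(segment_seconds: float, holdback_segments: int = HLS_HOLDBACK_SEGMENTS) -> float:
    """How far behind the live edge a spec-following player starts."""
    return segment_seconds * holdback_segments


def latency_budget_seconds(segment_seconds: float, encode_s: float, transport_s: float) -> float:
    """Very rough glass-to-glass estimate: encoding + transport + player holdback."""
    return encode_s + transport_s + holdback_seconds(segment_seconds)


if __name__ == "__main__":
    # encode_s and transport_s are hypothetical placeholders, not measured values.
    for seg in (6, 4, 2):
        total = latency_budget_seconds(seg, encode_s=1.5, transport_s=0.5)
        print(f"{seg}s segments -> ~{total:.1f}s")
```

With these placeholder inputs, 6-second segments put the estimate at about 20 seconds, while 2-second segments bring it to about 8 seconds, inside a 10-second target.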

Let’s dive into how one of our customers used Akamai to scale their live streaming with this process.

How to scale your live streaming service at the edge

Akamai delivers live streams through Media Services Live (MSL), which is designed to deliver live video content efficiently to global audiences over Akamai’s extensive network of edge servers distributed across diverse geographic locations.

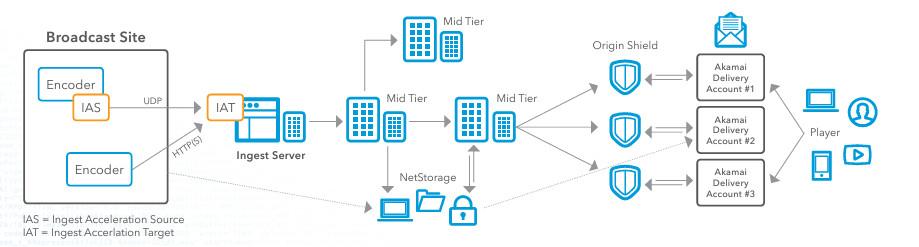

Let’s take a look at the above reference architecture that details both the ingest workflow and the delivery workflow for a live streaming event with Akamai. If you are looking to improve your live streaming services, this is how I would suggest setting up MSL to handle live streaming of an event.

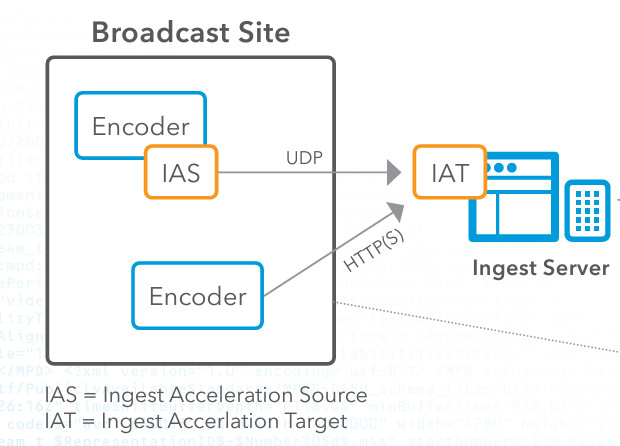

Step 1: Encoding and ingestion

First, capture the live video content at the broadcast site. Then set up an encoder to compress the raw video feed and send it using a streaming protocol such as HTTP Live Streaming (HLS) or Real-Time Messaging Protocol (RTMP). Next, install Akamai’s Ingest Acceleration Source (IAS), which you download from Akamai’s customer portal, and configure it to receive the stream from the encoder. IAS takes the stream from the encoder and forwards it over Akamai’s proprietary UDP-based transport protocol.
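As an illustration of the encoder step, the sketch below builds an ffmpeg command that pushes a low-latency H.264/AAC stream over RTMP. It assumes ffmpeg with libx264 as the encoder, and the ingest URL is a placeholder: check Akamai's IAS documentation for the address and protocol your installation actually expects. The function name `build_encoder_command` is hypothetical.

```python
import shlex


def build_encoder_command(source: str, ingest_url: str, video_kbps: int = 4500,
                          fps: int = 30, gop_seconds: int = 2) -> list[str]:
    """Build an ffmpeg command that pushes a low-latency H.264/AAC stream over RTMP."""
    return [
        "ffmpeg",
        "-re",                          # read the input at its native rate, like a live feed
        "-i", source,
        "-c:v", "libx264",
        "-preset", "veryfast",          # trade some compression efficiency for encoding speed
        "-tune", "zerolatency",         # turn off x264 features that add encoder delay
        "-b:v", f"{video_kbps}k",
        "-g", str(fps * gop_seconds),   # keyframe interval (assumes the source runs at `fps`)
        "-sc_threshold", "0",           # no extra keyframes on scene cuts, so GOPs stay aligned
        "-c:a", "aac",
        "-f", "flv",                    # RTMP carries FLV-muxed streams
        ingest_url,
    ]


if __name__ == "__main__":
    # Placeholder address: substitute the endpoint your IAS installation listens on.
    cmd = build_encoder_command("input.mp4", "rtmp://127.0.0.1:1935/live/event1")
    print(shlex.join(cmd))
```

A fixed 2-second keyframe interval lines up with the small segment sizes discussed earlier, since a segment can only start on a keyframe.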

Next, set up the Ingest Acceleration Target (IAT) on Akamai’s network and configure it to decode the stream back to its original format. The IAT is then connected to Akamai’s entry point software for further processing.

Packet loss is a common problem in this encoding and ingestion phase. Live video is highly sensitive to packet loss, which can degrade stream quality, cause buffering, and lead to an unsatisfactory viewing experience. Akamai’s UDP-based acceleration technology mitigates these effects by delivering data packets more reliably and quickly, even over unstable networks, so customers who use Akamai for live streaming are far less exposed to them.

This technology matters for a second reason: traditional TCP-based transmission can suffer from high latency because of its congestion control and retransmission mechanisms. Live streaming needs low latency so that viewers receive content in real time. By minimizing these overheads, Akamai’s UDP-based acceleration provides higher throughput and lower latency, which is crucial for live content where timely delivery is essential.
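The cost of packet loss for TCP can be estimated with the well-known approximation from Mathis et al. (1997): a single TCP flow's throughput is bounded by roughly C * MSS / (RTT * sqrt(p)), where p is the loss rate and C is about 1.22. This is a textbook model of TCP congestion avoidance, not a model of Akamai's UDP protocol; it only shows how quickly loss and distance cap TCP throughput.

```python
import math

# Constant for periodic loss in the Mathis et al. (1997) TCP throughput model.
MATHIS_C = math.sqrt(3 / 2)


def tcp_throughput_ceiling_bps(mss_bytes: int, rtt_s: float, loss_rate: float) -> float:
    """Approximate upper bound on one TCP flow's throughput: C * MSS / (RTT * sqrt(p))."""
    if loss_rate <= 0:
        raise ValueError("the model only applies when loss_rate > 0")
    return MATHIS_C * mss_bytes * 8 / (rtt_s * math.sqrt(loss_rate))


if __name__ == "__main__":
    # 1460-byte segments over a 100 ms path at increasing loss rates.
    for p in (0.0001, 0.001, 0.01):
        mbps = tcp_throughput_ceiling_bps(1460, 0.100, p) / 1e6
        print(f"loss {p:.2%}: <= {mbps:.2f} Mbps")
```

At 1% loss on a 100 ms path, the ceiling is roughly 1.4 Mbps, well below what a single HD rendition usually needs, and it falls further as round-trip time grows. A transport that does not tie its sending rate to this loss-and-RTT feedback loop can avoid that ceiling.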

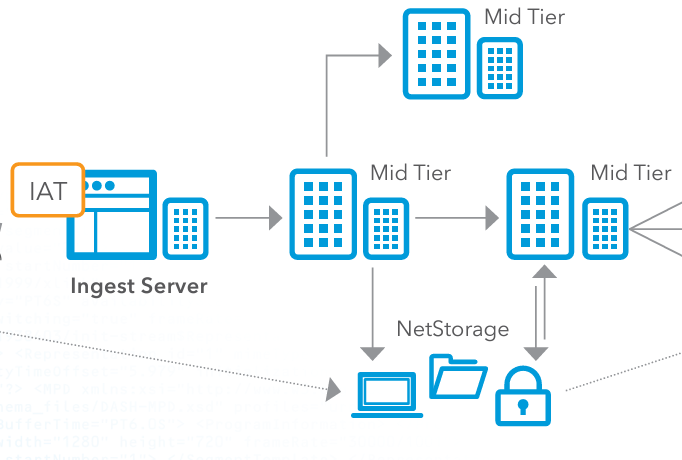

Step 2: Mid-Tier Distribution and Optional Storage

Next, direct the processed video stream to Akamai’s mid-tier servers, which act as intermediaries between the ingest servers and the edge delivery network. The mid-tier servers distribute the stream further, replicating and caching it at multiple points across the network to balance the load and improve redundancy. Getting this load balancing right matters whenever a provider handles live streaming; otherwise, individual servers become bottlenecks. Spreading requests across Akamai’s edge delivery network keeps any single server from being overwhelmed by too many requests, which is especially important during high-traffic events.
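One widely used way to spread cached content across a tier of servers is consistent hashing. The source doesn't describe how Akamai's mid-tier assigns content, so treat this as a generic illustration: each server owns many points on a hash ring, each content key (such as a segment URL) goes to the next point clockwise, and removing a server only moves the keys it owned. The server names and URLs are hypothetical.

```python
import bisect
import hashlib


def _hash(key: str) -> int:
    """Stable 64-bit hash; blake2b is used for even spread, not for security."""
    return int.from_bytes(hashlib.blake2b(key.encode(), digest_size=8).digest(), "big")


class HashRing:
    """Consistent-hash ring mapping content keys (e.g. segment URLs) to servers."""

    def __init__(self, servers, vnodes: int = 100):
        self.vnodes = vnodes
        self._ring: list[tuple[int, str]] = []  # sorted (hash, server) points
        for server in servers:
            self.add(server)

    def add(self, server: str) -> None:
        # Each server owns many virtual points, which evens out the key distribution.
        for i in range(self.vnodes):
            bisect.insort(self._ring, (_hash(f"{server}#{i}"), server))

    def remove(self, server: str) -> None:
        self._ring = [point for point in self._ring if point[1] != server]

    def lookup(self, key: str) -> str:
        if not self._ring:
            raise LookupError("no servers in the ring")
        # First point clockwise from the key's hash, wrapping around at the end.
        idx = bisect.bisect_right(self._ring, (_hash(key), "")) % len(self._ring)
        return self._ring[idx][1]


if __name__ == "__main__":
    ring = HashRing(["mid-a", "mid-b", "mid-c"])  # hypothetical mid-tier servers
    keys = [f"/live/event1/720p/seg{i}.ts" for i in range(1000)]
    before = {k: ring.lookup(k) for k in keys}
    ring.remove("mid-b")
    moved = sum(1 for k in keys if ring.lookup(k) != before[k])
    print(f"{moved} of {len(keys)} keys moved")  # only keys that lived on mid-b move
```

The design choice here is stability: because most keys stay put when a server joins or leaves, caches on the surviving servers stay warm instead of being reshuffled during a traffic spike.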

Optionally, you can store the video content in Akamai’s NetStorage system, a scalable, secure storage solution designed to keep content available and quickly retrievable when needed. It can serve as a backup or as a source for on-demand playback.

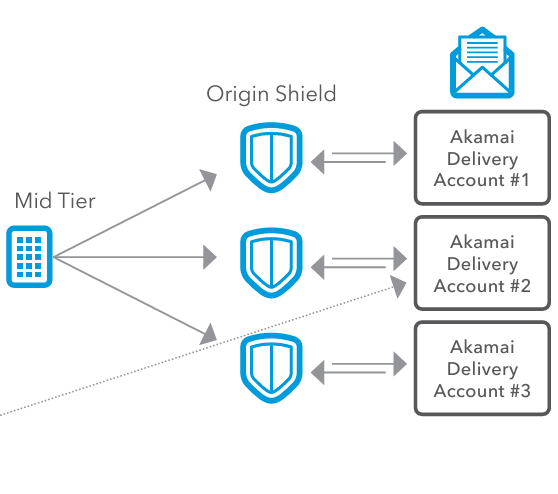

Step 3: Origin Shield

Next, the video goes to Origin Shield, which helps manage traffic spikes by acting as a buffer between the ingest servers and the Akamai CDN. During peak times or unexpected surges in viewership, Origin Shield absorbs the increased load so the ingest servers aren’t overwhelmed, letting the system accommodate large numbers of viewers without performance degradation.

Origin Shield also improves cache efficiency. Because it adds another layer of caching, fewer requests need to go back to the origin servers. This reduces the load on the origin servers, conserves bandwidth, and speeds up content delivery, so end users get faster access to content with lower latency.
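The effect of the extra caching layer can be shown with a deliberately simplified model: each edge cache forwards misses to its parent, which is either the origin directly or a shared shield cache in front of it. The class and function names are hypothetical, and the model ignores eviction, TTLs, and concurrent requests.

```python
class Origin:
    """Stand-in for the origin; counts how many requests reach it."""

    def __init__(self) -> None:
        self.fetches = 0

    def fetch(self, path: str) -> bytes:
        self.fetches += 1
        return f"segment data for {path}".encode()


class CachingTier:
    """A cache that forwards misses to its parent (another tier or the origin)."""

    def __init__(self, parent) -> None:
        self.parent = parent
        self.cache: dict[str, bytes] = {}

    def fetch(self, path: str) -> bytes:
        if path not in self.cache:
            self.cache[path] = self.parent.fetch(path)
        return self.cache[path]


def origin_fetches(num_edges: int, num_segments: int, use_shield: bool) -> int:
    """Count origin requests when every edge serves every segment once."""
    origin = Origin()
    parent = CachingTier(origin) if use_shield else origin
    edges = [CachingTier(parent) for _ in range(num_edges)]
    segments = [f"/live/event1/seg{i}.ts" for i in range(num_segments)]
    for edge in edges:
        for seg in segments:
            edge.fetch(seg)
    return origin.fetches


if __name__ == "__main__":
    print(origin_fetches(50, 10, use_shield=False))  # → 500
    print(origin_fetches(50, 10, use_shield=True))   # → 10
```

Without the shield, origin load grows with the number of edge servers; with it, the origin sees roughly one request per segment no matter how many edges are serving viewers.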

Step 4: Delivery Configuration and End User Delivery

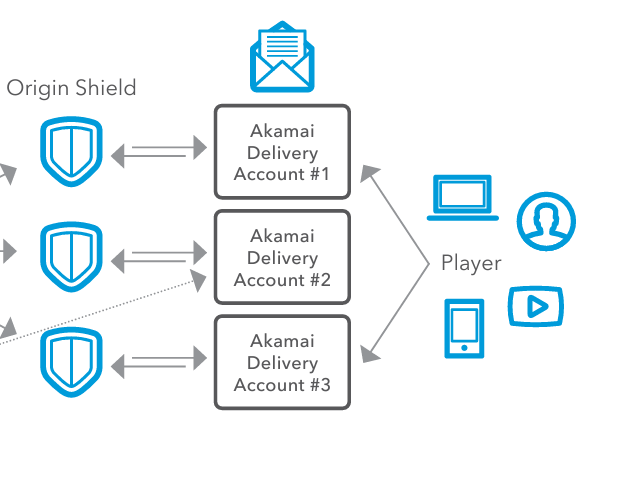

Now, it’s time to distribute the content. When you deliver content globally, different regions may have different network conditions, regulatory requirements, or viewer preferences. To handle this, our customers use Akamai Delivery Accounts. Each delivery account corresponds to a separate delivery configuration, so content providers can tailor settings such as caching policies, security protocols, and delivery optimizations to specific regions, devices, or types of content. This lets providers distribute their content efficiently and flexibly across multiple delivery channels.
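The idea of per-region settings can be sketched as a small lookup table with a global fallback. This is a hypothetical data model, not Akamai's configuration schema: the field names, TTL values, and the `XX` placeholder country code are illustrative. One grounded detail is the TTL split: live playlists are rewritten every time a segment is published, so they are cached only briefly, while published segments don't change and can be cached longer.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DeliveryProfile:
    """Hypothetical per-region delivery settings (not Akamai's configuration schema)."""
    name: str
    playlist_ttl_s: int       # live playlists change with every new segment, so cache briefly
    segment_ttl_s: int        # published segments don't change, so they can be cached longer
    require_token_auth: bool  # e.g. for rights-restricted regions
    blocked_countries: frozenset[str] = frozenset()


DEFAULT_PROFILE = DeliveryProfile("global", playlist_ttl_s=2, segment_ttl_s=300,
                                  require_token_auth=False)

PROFILES = {
    "eu": DeliveryProfile("eu", playlist_ttl_s=2, segment_ttl_s=300, require_token_auth=True),
    "apac": DeliveryProfile("apac", playlist_ttl_s=1, segment_ttl_s=600, require_token_auth=False,
                            blocked_countries=frozenset({"XX"})),  # "XX" is a placeholder code
}


def profile_for(region: str) -> DeliveryProfile:
    """Return the region's profile, falling back to the global default."""
    return PROFILES.get(region, DEFAULT_PROFILE)


def may_serve(region: str, country: str) -> bool:
    """Apply the region's country restrictions to a viewer's country code."""
    return country not in profile_for(region).blocked_countries


if __name__ == "__main__":
    print(profile_for("eu").require_token_auth)
    print(profile_for("latam").name)  # unknown region falls back to the default
    print(may_serve("apac", "XX"))
```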

Finally, the video content is delivered to the end users through a variety of devices such as computers, smartphones, tablets, and smart TVs. The edge servers deliver the video stream to the player’s device with minimal latency, ensuring a smooth and high-quality viewing experience.
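On the player side, the small segment sizes described earlier pay off through adaptive bitrate switching: at each segment boundary the player can move to a rendition that fits its current throughput. The sketch below shows the simplest throughput-based rule, picking the highest rendition that fits within a safety margin. The bitrate ladder and the 0.8 margin are hypothetical, and real players use more sophisticated throughput and buffer-based logic.

```python
# Hypothetical bitrate ladder in kbit/s, lowest to highest rendition.
LADDER_KBPS = (400, 1200, 2500, 4500, 8000)


def choose_rendition(measured_kbps: float, ladder=LADDER_KBPS, safety: float = 0.8) -> int:
    """Pick the highest rendition that fits within a safety margin of measured throughput."""
    budget = measured_kbps * safety
    fitting = [b for b in sorted(ladder) if b <= budget]
    # If even the lowest rendition doesn't fit, fall back to it rather than picking nothing.
    return fitting[-1] if fitting else min(ladder)


if __name__ == "__main__":
    # Throughput drops mid-stream; the player switches down, then back up.
    for kbps in (9000, 3500, 600, 7000):
        print(kbps, "->", choose_rendition(kbps))
```

With 2-second segments, a switch like this can take effect within a couple of seconds of a bandwidth drop, before the player's buffer drains.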

Conclusion

There are many things you have to account for when building a live streaming solution. First, you have to account for latency and buffering, especially during live events where real-time delivery is critical. High latency delays the live feed, which frustrates end users. You also need to architect for packet loss, which can degrade video quality or cause interruptions and leave viewers with inconsistent, unreliable streams. Lastly, you have to be able to scale and load balance; otherwise, you may face server overloads and crashes, resulting in service outages and an inability to handle peak loads efficiently. Akamai’s optimized delivery network addresses all of these problems through its UDP-based acceleration, its ingest network, and its scalable CDN.

Using Akamai for live streaming enables efficient, scalable, and low-latency delivery of live video streams, providing a great experience for end users. By bringing processing power closer to the edge of the network with Akamai, streaming services can optimize performance and enhance scalability.

For a step-by-step tutorial on video transcoding, see our guide to converting an MP4 file to HLS format. You can also read our docs on the Media Services Live Stream Provisioning API.

To learn more about how to implement this yourself, connect with us on Twitter or LinkedIn, or subscribe to our YouTube channel.

If your organization is considering optimizing its video transcoding and Kubernetes solutions, you can try Linode’s solutions by signing up to get $100 in free credits.
