
Deploy Apache Spark through the Linode Marketplace

Quickly deploy a Compute Instance with many software applications pre-installed and ready to use.

Apache Spark is a powerful open-source unified analytics engine for large-scale data processing. It provides high-level APIs in Java, Scala, Python, and R, and an optimized engine that supports general execution graphs. Spark handles both batch and streaming workloads, and because it can keep intermediate data in memory, it is often significantly faster than disk-based big data frameworks such as Hadoop MapReduce.

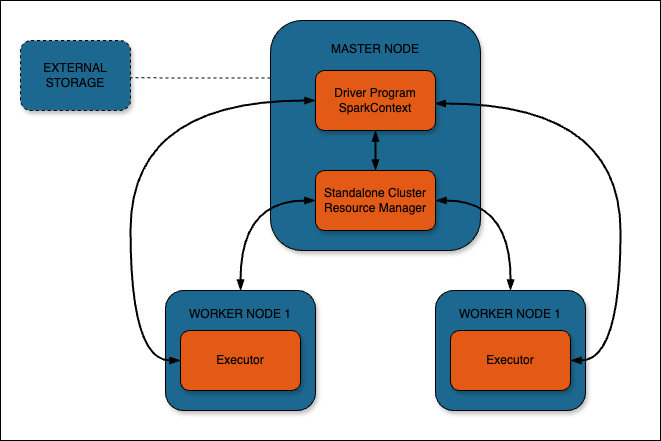

This cluster is deployed with Apache Spark in standalone cluster mode and consists of a master node along with two worker nodes. The standalone cluster manager is a simple way to run Spark in a distributed environment, providing easy setup and management for Spark applications.
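As a rough illustration of how applications attach to a standalone cluster: clients address the master with a spark:// URL, and 7077 is Spark's default standalone master port. The Python sketch below only builds that URL and a spark-submit argument list. The hostname master.example.com, the script app.py, the 1g executor memory, and the assumption that this deployment keeps the default port are hypothetical, not details taken from this guide.

```python
import shlex


def standalone_master_url(host: str, port: int = 7077) -> str:
    """Return the spark:// URL of a standalone master (7077 is Spark's default port)."""
    return f"spark://{host}:{port}"


def spark_submit_argv(master_host: str, app_path: str, *app_args: str,
                      executor_memory: str = "1g") -> list[str]:
    """Build a spark-submit argument list that targets the standalone master."""
    return [
        "spark-submit",
        "--master", standalone_master_url(master_host),
        # Per-executor heap; it must fit within the memory each worker offers.
        "--executor-memory", executor_memory,
        app_path,
        *app_args,
    ]


if __name__ == "__main__":
    # Hypothetical host and script; substitute your own values.
    argv = spark_submit_argv("master.example.com", "app.py")
    print(shlex.join(argv))
    # To submit for real (requires spark-submit on the PATH):
    #   import subprocess; subprocess.run(argv, check=True)
```

Building an argument list rather than a single shell string avoids quoting problems; shlex.join is used only to print a copy-pasteable version of the command.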

Scala, a multi-paradigm programming language, is integral to Apache Spark. It combines object-oriented and functional programming in a concise, high-level language that runs on the Java Virtual Machine (JVM). Spark itself is written in Scala, and while Spark supports multiple languages, Scala provides the most natural and performant interface to Spark’s APIs.

NGINX is installed on the master node as a reverse proxy to the worker nodes. The Spark UI is served at the domain you entered during deployment (or at the master node's rDNS value if no domain was entered), and the worker UIs are reachable through the same reverse proxy. To access the UI on the master, enter the username and password you specified during cluster deployment. These credentials are also stored in /home/$USER/.credentials for reference.

A Let’s Encrypt certificate is installed in the NGINX configuration. Using NGINX as a reverse proxy provides both authentication for the front-end UI and a simple way to renew the Let’s Encrypt certificates used for HTTPS.
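One way to confirm that certificate renewal is working is to check how many days remain before the served certificate expires. The sketch below uses only Python's standard ssl and socket modules; the hostname in the usage note is a placeholder for your domain or rDNS value.

```python
import socket
import ssl
import time
from typing import Optional


def fetch_not_after(host: str, port: int = 443, timeout: float = 10.0) -> str:
    """Connect over TLS and return the certificate's notAfter string."""
    context = ssl.create_default_context()  # verifies the chain and the hostname
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()["notAfter"]


def days_remaining(not_after: str, now: Optional[float] = None) -> float:
    """Days from `now` (default: the current time) until `not_after`."""
    if now is None:
        now = time.time()
    return (ssl.cert_time_to_seconds(not_after) - now) / 86400
```

For example, days_remaining(fetch_not_after("spark.example.com")) returns the days left on that host's certificate; if the value keeps shrinking toward zero across checks, renewal is probably not running.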

Worker nodes need at least 4GB of RAM so that jobs can run on them without encountering memory constraints. This configuration allows for efficient processing of moderately sized datasets and complex analytics tasks.
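To make the 4GB figure more concrete, the worked arithmetic below applies Spark's documented defaults: in standalone mode SPARK_WORKER_MEMORY defaults to total memory minus 1 GiB, Spark reserves 300 MiB of each executor heap, and spark.memory.fraction (0.6) of the remainder forms the unified execution and storage pool, with spark.memory.storageFraction (0.5) of that pool protected from eviction for cached data. This guide does not say how the Marketplace deployment sets these values, so treat them as assumptions. The 4096 MiB input is the plan's nominal RAM and the JVM's usable heap is slightly smaller, so the results are upper estimates.

```python
RESERVED_MIB = 300       # heap Spark sets aside before sizing the unified pool
MEMORY_FRACTION = 0.6    # default spark.memory.fraction
STORAGE_FRACTION = 0.5   # default spark.memory.storageFraction


def default_worker_memory_mib(total_ram_mib: int) -> int:
    """Standalone default for SPARK_WORKER_MEMORY: total memory minus 1 GiB."""
    return total_ram_mib - 1024


def unified_memory_mib(executor_heap_mib: int,
                       fraction: float = MEMORY_FRACTION) -> float:
    """Approximate execution + storage pool for one executor heap, in MiB."""
    return (executor_heap_mib - RESERVED_MIB) * fraction


if __name__ == "__main__":
    worker = default_worker_memory_mib(4096)  # nominal 4GB plan (assumption)
    pool = unified_memory_mib(worker)         # one executor using all of it
    print(f"worker memory offered: {worker} MiB")
    print(f"unified pool: {pool:.1f} MiB, "
          f"of which {pool * STORAGE_FRACTION:.1f} MiB is eviction-protected storage")
    print(f"unified pool with the 1g default executor: {unified_memory_mib(1024):.1f} MiB")
```

Under these assumptions, a single executor sized to the whole worker gets a pool of about 1663 MiB, while an application that keeps the 1g default executor memory gets about 434 MiB.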

Cluster Deployment Architecture

Deploying a Marketplace App

The Linode Marketplace lets you easily deploy software on a Compute Instance using Cloud Manager. See Get Started with Marketplace Apps for complete steps.

1. Log in to Cloud Manager and select the Marketplace link from the left navigation menu. This displays the Linode Create page with the Marketplace tab pre-selected.

2. Under the Select App section, select the app you would like to deploy.

3. Complete the form by following the steps and advice within the Creating a Compute Instance guide. Depending on the Marketplace App you selected, there may be additional configuration options available. See the Configuration Options section below for compatible distributions, recommended plans, and any additional configuration options available for this Marketplace App.

4. Click the Create Linode button. Once the Compute Instance has been provisioned and has fully powered on, wait for the software installation to complete. If the instance is powered off or restarted before this time, the software installation will likely fail.

5. To verify that the app has been fully installed, see Get Started with Marketplace Apps > Verify Installation. Once installed, follow the instructions within the Getting Started After Deployment section to access the application and start using it.

Configuration Options

- Supported distributions: Ubuntu 24.04 LTS

- Suggested minimum plan: 4GB RAM

Spark Options

- Linode API Token: The provisioner node uses an authenticated API token to create the additional components of the cluster. This token is required to fully create the Spark cluster.
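Linode API v4 tokens are sent as bearer tokens. Before deploying, you could check that a token is accepted with a read-only call such as listing your Compute Instances, as in the Python sketch below. The guide does not list the scopes the provisioner needs; read/write access to Linodes seems likely because it creates nodes, but that is an assumption, and this check only demonstrates read access.

```python
import json
import urllib.request

API_BASE = "https://api.linode.com/v4"


def build_request(token: str, path: str = "/linode/instances") -> urllib.request.Request:
    """Prepare an authenticated GET request for the Linode API v4."""
    return urllib.request.Request(
        API_BASE + path,
        headers={"Authorization": f"Bearer {token}", "Accept": "application/json"},
    )


def list_instance_labels(token: str) -> list[str]:
    """Return the labels of Compute Instances on the first page of results.

    urllib raises HTTPError if the API rejects the request (for example, 401).
    """
    with urllib.request.urlopen(build_request(token), timeout=15) as response:
        payload = json.load(response)
    return [instance["label"] for instance in payload["data"]]
```

For example, list_instance_labels(os.environ["LINODE_TOKEN"]), where LINODE_TOKEN is a hypothetical environment variable holding the token. A 401 response typically means the token was rejected, and a 403 usually means it lacks the needed scope.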

Limited Sudo User

You need to fill out the following fields to automatically create a limited sudo user with a strong generated password for your new Compute Instance. This account is assigned to the sudo group, which provides elevated permissions when running commands with the sudo prefix.

Limited sudo user: Enter your preferred username for the limited user. The username must not contain capital letters, spaces, or special characters.

Locating The Generated Sudo Password: A password is generated for the limited user and stored in a .credentials file in their home directory, along with application-specific passwords. This can be viewed by running: cat /home/$USERNAME/.credentials

For best results, add an account SSH key for the Cloud Manager user that is deploying the instance, and select that user as an authorized_user, either in the API or in Cloud Manager. Their SSH public key will be assigned to both root and the limited user.

Disable root access over SSH: To block the root user from logging in over SSH, select Yes. You can still switch to the root user once logged in, and you can also log in as root through Lish.

Accessing The Instance Without SSH: If you disable root access for your deployment and do not provide a valid Account SSH Key assigned to the authorized_user, you will need to log in as the root user via the Lish console and run cat /home/$USERNAME/.credentials to view the generated password for the limited user.

Spark cluster size: The size of the Spark cluster. This cluster is deployed with a master node and two worker nodes.

Spark user: The username you wish to use to log in to the Spark UI.

Spark UI password: The password you wish to use to log in to the Spark UI.
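A quick pre-flight check of the field values can catch mistakes before a deployment fails. The sketch below reads "no capital letters, spaces, or special characters" as lowercase ASCII letters and digits only, which is an assumption about the form's exact rule. It also flags any field containing a double quotation mark, which the warning at the end of this section advises against. The field names in the usage note are hypothetical.

```python
import re

# Assumption: the form's "no capital letters, spaces, or special characters"
# rule is read as "lowercase ASCII letters and digits only".
_USERNAME = re.compile(r"[a-z0-9]+")


def valid_username(name: str) -> bool:
    """True if the limited sudo username follows the (assumed) form rule."""
    return _USERNAME.fullmatch(name) is not None


def fields_with_double_quotes(fields: dict[str, str]) -> list[str]:
    """Names of app-specific fields whose value contains a double quotation mark."""
    return [key for key, value in fields.items() if '"' in value]
```

For example, valid_username("Admin") returns False, and fields_with_double_quotes({"spark_user": "spark", "spark_password": 'p"w'}) returns ["spark_password"].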

Do not use a double quotation mark character (") within any of the App-specific configuration fields, including user and database password fields. This special character may cause issues during deployment.

Getting Started After Deployment

Spark UI

Once the deployment is complete, visit the Spark UI at the URL listed in /etc/motd (the message of the day shown when you log in). This is either the domain you entered when deploying the cluster or the reverse DNS value of the master node.

The Spark cluster needs access to storage for your data, such as Amazon S3 or S3-compatible storage, HDFS, Azure Blob Storage, Apache HBase, or your local filesystem. For more details, see Integration With Cloud Infrastructures.
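Spark usually reaches S3 and S3-compatible object storage through Hadoop's S3A connector, configured with fs.s3a.* properties passed through Spark's spark.hadoop. prefix. The sketch below builds those properties as spark-submit --conf flags. It assumes the hadoop-aws connector and its AWS SDK dependency are on the cluster's classpath, which this guide does not state, and the endpoint and keys in the usage note are placeholders.

```python
def s3a_conf(endpoint: str, access_key: str, secret_key: str,
             path_style: bool = False) -> dict[str, str]:
    """Spark properties pointing Hadoop's S3A connector at an S3-compatible endpoint."""
    return {
        "spark.hadoop.fs.s3a.endpoint": endpoint,
        "spark.hadoop.fs.s3a.access.key": access_key,
        "spark.hadoop.fs.s3a.secret.key": secret_key,
        # Some S3-compatible services need path-style URLs; Hadoop defaults to false.
        "spark.hadoop.fs.s3a.path.style.access": str(path_style).lower(),
    }


def as_conf_flags(conf: dict[str, str]) -> list[str]:
    """Flatten properties into repeated `--conf key=value` spark-submit arguments."""
    flags: list[str] = []
    for key, value in conf.items():
        flags += ["--conf", f"{key}={value}"]
    return flags
```

For example, as_conf_flags(s3a_conf("https://s3.example.com", ACCESS_KEY, SECRET_KEY)) could be added to a spark-submit command, after which jobs read paths such as s3a://my-bucket/data/. Passing the secret key on the command line exposes it in the process list, so placing these properties in spark-defaults.conf is generally safer.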

Authentication

The credentials for the Spark UI are stored in the home directory of the sudo user created during deployment: /home/$SUDO_USER/.credentials. For example, if you created a user called admin, the credentials file is located at /home/admin/.credentials.
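The guide states only that the UI asks for a username and password. NGINX commonly implements this with HTTP Basic authentication, so the sketch below assumes that scheme. It fetches the UI with the credentials from the .credentials file and returns the HTTP status, which can be handy for scripted health checks. The URL and credentials in the usage note are placeholders.

```python
import base64
import urllib.request


def basic_auth_header(user: str, password: str) -> str:
    """Value for an HTTP Basic Authorization header (RFC 7617)."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return f"Basic {token}"


def ui_status(url: str, user: str, password: str) -> int:
    """Fetch the Spark UI through the NGINX proxy and return the HTTP status code."""
    request = urllib.request.Request(
        url, headers={"Authorization": basic_auth_header(user, password)}
    )
    with urllib.request.urlopen(request, timeout=15) as response:
        return response.status
```

For example, ui_status("https://spark.example.com/", "admin", "your-ui-password") returns 200 when the credentials are accepted; under the Basic-authentication assumption, rejected credentials raise urllib.error.HTTPError with code 401.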

Spark Shell

The installation includes spark-shell, Apache Spark's interactive Scala shell, which lets you run queries on a Spark cluster and see the results immediately.
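spark-shell is a Scala REPL in which a SparkContext is already available as sc. The Python sketch below pipes a one-line Scala query into it, which can be useful for scripted smoke tests; typing the same line at the scala> prompt has the same effect. The master URL is an assumption: without --master and without a master configured in spark-defaults.conf, spark-shell runs in local mode rather than on the cluster, and this guide does not say which default the installation configures.

```python
import subprocess
from typing import Optional

# Scala statement to feed to the REPL; `sc` is the SparkContext spark-shell creates.
SCALA_SNIPPET = "println(sc.parallelize(1 to 100).sum())\n"


def spark_shell_argv(master_url: Optional[str] = None) -> list[str]:
    """spark-shell command, optionally pinned to a specific master URL."""
    argv = ["spark-shell"]
    if master_url:
        argv += ["--master", master_url]
    return argv


def run_snippet(code: str, master_url: Optional[str] = None) -> str:
    """Pipe Scala code into spark-shell (it exits at end of input) and return stdout."""
    result = subprocess.run(
        spark_shell_argv(master_url), input=code, capture_output=True,
        text=True, check=True,
    )
    return result.stdout
```

For example, run_snippet(SCALA_SNIPPET, "spark://master.example.com:7077") (a placeholder hostname with Spark's default standalone port) should include the sum 5050.0 in its output alongside the REPL banner.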

Software Included

The Apache Spark Marketplace App installs the following software on your Linode:

| Software | Version | Description |
|---|---|---|
| Apache Spark | 3.5 | Unified analytics engine for large-scale data processing |
| Java OpenJDK | 11.0 | Runtime environment for Spark |
| Scala | Latest | Programming language that Spark is built with, providing a powerful interface to Spark’s APIs |
| NGINX | Latest | High-performance HTTP server and reverse proxy |
| UFW | | Uncomplicated Firewall for managing firewall rules |
| Fail2ban | | Intrusion prevention software framework for protection against brute-force attacks |


This page was originally published on