Linode is thrashing and OOMing randomly... help

First, the ugly OOM output:

ck:0kB pagetables:0kB unstable:0kB bounce:0kB writeback_tmp:0kB pages_scanned:0 all_unreclaimable? yes

lowmem_reserve[]: 0 700 754 754

Normal free:5060kB min:3348kB low:4184kB high:5020kB active_anon:339984kB inactive_anon:340208kB active_file:0kB inactive_file:0kB unevictable:1408kB isolated(anon):0kB isolated(file):0kB present:717288kB mlocked:1408kB dirty:0kB writeback:0kB mapped:604kB shmem:28kB slab_reclaimable:7776kB slab_unreclaimable:8448kB kernel_stack:1128kB pagetables:4404kB unstable:0kB bounce:0kB writeback_tmp:0kB pages_scanned:0 all_unreclaimable? yes

lowmem_reserve[]: 0 0 428 428

HighMem free:116kB min:128kB low:192kB high:256kB active_anon:17624kB inactive_anon:17932kB active_file:0kB inactive_file:0kB unevictable:3136kB isolated(anon):0kB isolated(file):0kB present:54868kB mlocked:3136kB dirty:0kB writeback:0kB mapped:3080kB shmem:0kB slab_reclaimable:0kB slab_unreclaimable:0kB kernel_stack:0kB pagetables:0kB unstable:0kB bounce:0kB writeback_tmp:0kB pages_scanned:2 all_unreclaimable? yes

lowmem_reserve[]: 0 0 0 0

DMA: 6*4kB 2*8kB 7*16kB 8*32kB 8*64kB 7*128kB 5*256kB 0*512kB 0*1024kB 0*2048kB 0*4096kB = 3096kB

Normal: 639*4kB 297*8kB 9*16kB 0*32kB 0*64kB 0*128kB 0*256kB 0*512kB 0*1024kB 0*2048kB 0*4096kB = 5076kB

HighMem: 6*4kB 1*8kB 0*16kB 3*32kB 0*64kB 0*128kB 0*256kB 0*512kB 0*1024kB 0*2048kB 0*4096kB = 128kB

56664 total pagecache pages

55697 pages in swap cache

Swap cache stats: add 17100319, delete 17044622, find 7567477/9043262

Free swap = 0kB

Total swap = 524284kB

198640 pages RAM

13826 pages HighMem

6639 pages reserved

14545 pages shared

188067 pages non-shared

Out of memory: Kill process 3448 (httpd) score 41 or sacrifice child

Killed process 3448 (httpd) total-vm:74716kB, anon-rss:26132kB, file-rss:1120kB
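Since the kills look random, it can help to see which processes the OOM killer has been picking over time. A minimal sketch, assuming kernel messages are logged to /var/log/messages (the usual syslog target on CentOS-style systems; `dmesg` also works for the current boot). `oom_kills` is a hypothetical helper name, not an existing tool.

```shell
#!/bin/sh
# oom_kills (hypothetical helper): read kernel log lines on stdin, extract the
# process name from each "Killed process PID (NAME)" message, and print how
# many times each name was killed, most-killed first.
# "Out of memory: Kill process ..." lines are deliberately not counted, so each
# kill is counted once.
oom_kills() {
    sed -n 's/.*Killed process [0-9][0-9]* (\([^)]*\)).*/\1/p' | sort | uniq -c | sort -rn
}

# Example on a live box (the log path is an assumption):
#   oom_kills < /var/log/messages
#   dmesg | oom_kills
```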

Processes running

[root@li21-298 ~]# ps aux

USER PID %CPU %MEM VSZ RSS TTY STAT START TIME COMMAND

root 1 0.0 0.0 2208 492 ? Ss 19:34 0:00 init [3]

root 2 0.0 0.0 0 0 ? S 19:34 0:00 [kthreadd]

root 3 0.0 0.0 0 0 ? S 19:34 0:00 [ksoftirqd/0]

root 4 0.0 0.0 0 0 ? S 19:34 0:00 [kworker/0:0]

root 5 0.0 0.0 0 0 ? S 19:34 0:00 [kworker/u:0]

root 6 0.0 0.0 0 0 ? S 19:34 0:00 [migration/0]

root 7 0.0 0.0 0 0 ? S 19:34 0:00 [migration/1]

root 9 0.0 0.0 0 0 ? S 19:34 0:00 [ksoftirqd/1]

root 10 0.0 0.0 0 0 ? S 19:34 0:00 [migration/2]

root 12 0.0 0.0 0 0 ? S 19:34 0:00 [ksoftirqd/2]

root 13 0.0 0.0 0 0 ? S 19:34 0:00 [migration/3]

root 15 0.0 0.0 0 0 ? S 19:34 0:00 [ksoftirqd/3]

root 16 0.0 0.0 0 0 ? S< 19:34 0:00 [cpuset]

root 17 0.0 0.0 0 0 ? S< 19:34 0:00 [khelper]

root 18 0.0 0.0 0 0 ? S 19:34 0:00 [kdevtmpfs]

root 19 0.0 0.0 0 0 ? S 19:34 0:00 [kworker/u:1]

root 21 0.0 0.0 0 0 ? S 19:34 0:00 [xenwatch]

root 22 0.0 0.0 0 0 ? S 19:34 0:00 [xenbus]

root 162 0.0 0.0 0 0 ? S 19:34 0:00 [sync_supers]

root 164 0.0 0.0 0 0 ? S 19:34 0:00 [bdi-default]

root 166 0.0 0.0 0 0 ? S< 19:34 0:00 [kblockd]

root 176 0.0 0.0 0 0 ? S 19:34 0:00 [kworker/3:1]

root 178 0.0 0.0 0 0 ? S< 19:34 0:00 [md]

root 262 0.0 0.0 0 0 ? S< 19:34 0:00 [rpciod]

root 263 0.0 0.0 0 0 ? S 19:34 0:00 [kworker/2:1]

root 275 0.0 0.0 0 0 ? S 19:34 0:04 [kswapd0]

root 276 0.0 0.0 0 0 ? SN 19:34 0:00 [ksmd]

root 277 0.0 0.0 0 0 ? S 19:34 0:00 [fsnotify_mark]

root 281 0.0 0.0 0 0 ? S 19:34 0:00 [ecryptfs-kthr]

root 283 0.0 0.0 0 0 ? S< 19:34 0:00 [nfsiod]

root 284 0.0 0.0 0 0 ? S< 19:34 0:00 [cifsiod]

root 287 0.0 0.0 0 0 ? S 19:34 0:00 [jfsIO]

root 288 0.0 0.0 0 0 ? S 19:34 0:00 [jfsCommit]

root 289 0.0 0.0 0 0 ? S 19:34 0:00 [jfsCommit]

root 290 0.0 0.0 0 0 ? S 19:34 0:00 [jfsCommit]

root 291 0.0 0.0 0 0 ? S 19:34 0:00 [jfsCommit]

root 292 0.0 0.0 0 0 ? S 19:34 0:00 [jfsSync]

root 293 0.0 0.0 0 0 ? S< 19:34 0:00 [xfsalloc]

root 294 0.0 0.0 0 0 ? S< 19:34 0:00 [xfsmrucache]

root 295 0.0 0.0 0 0 ? S< 19:34 0:00 [xfslogd]

root 296 0.0 0.0 0 0 ? S< 19:34 0:00 [glock_workque]

root 297 0.0 0.0 0 0 ? S< 19:34 0:00 [delete_workqu]

root 298 0.0 0.0 0 0 ? S< 19:34 0:00 [gfs_recovery]

root 299 0.0 0.0 0 0 ? S< 19:34 0:00 [crypto]

root 862 0.0 0.0 0 0 ? S 19:34 0:00 [khvcd]

root 976 0.0 0.0 0 0 ? S< 19:34 0:00 [kpsmoused]

root 1016 0.0 0.0 0 0 ? S< 19:34 0:00 [deferwq]

root 1019 0.0 0.0 0 0 ? S 19:34 0:00 [kjournald]

root 1023 0.0 0.0 0 0 ? S 19:34 0:00 [kworker/1:1]

root 1044 0.0 0.0 0 0 ? S 19:34 0:00 [kauditd]

root 1077 0.0 0.0 2424 356 ? S

root 2692 0.0 0.0 2452 40 ? Ss 19:34 0:00 /sbin/dhclient

root 2759 0.0 0.0 10624 420 ? S

root 2784 0.0 0.0 1808 288 ? Ss 19:34 0:00 klogd -x

named 2825 0.0 0.1 58936 1032 ? Ssl 19:34 0:00 /usr/sbin/named

dbus 2847 0.0 0.0 2896 504 ? Ss 19:34 0:00 dbus-daemon --s

root 2884 0.0 0.0 23272 524 ? Ssl 19:34 0:00 automount

root 2903 0.0 0.0 7256 632 ? Ss 19:34 0:00 /usr/sbin/sshd

ntp 2917 0.0 0.5 4548 4544 ? SLs 19:34 0:00 ntpd -u ntp:ntp

root 2928 0.0 0.0 5344 160 ? Ss 19:34 0:00 /usr/sbin/vsftp

root 2964 0.0 0.0 4676 572 ? S 19:34 0:00 /bin/sh /usr/bi

root 3018 0.0 0.0 0 0 ? S 19:34 0:00 [flush-202:0]

mysql 3057 7.9 1.7 126808 13296 ? Sl 19:34 14:23 /usr/libexec/my

root 3089 0.0 0.0 9372 696 ? Ss 19:34 0:00 sendmail: accep

smmsp 3097 0.0 0.0 8284 336 ? Ss 19:34 0:00 sendmail: Queue

root 3106 0.0 0.0 2044 152 ? Ss 19:34 0:00 gpm -m /dev/inp

root 3115 0.0 0.1 27820 1320 ? Ss 19:34 0:00 /usr/sbin/httpd

root 3123 0.0 0.0 5380 552 ? Ss 19:34 0:00 crond

xfs 3141 0.0 0.0 3308 436 ? Ss 19:34 0:00 xfs -droppriv -

apache 3235 0.0 4.6 56924 35484 ? S 19:34 0:03 /usr/sbin/httpd

apache 3239 0.0 5.7 72740 44192 ? S 19:34 0:04 /usr/sbin/httpd

apache 3240 0.0 4.4 56636 34068 ? S 19:34 0:02 /usr/sbin/httpd

apache 3241 0.0 4.1 52836 31660 ? S 19:34 0:01 /usr/sbin/httpd

apache 3242 0.0 3.9 52800 30372 ? S 19:34 0:01 /usr/sbin/httpd

apache 3243 0.0 4.0 52788 31428 ? S 19:34 0:02 /usr/sbin/httpd

apache 3244 0.0 4.4 56924 34556 ? S 19:34 0:02 /usr/sbin/httpd

apache 3245 0.0 4.5 57196 34828 ? S 19:34 0:03 /usr/sbin/httpd

root 3264 0.0 0.0 2408 180 ? Ss 19:34 0:00 /usr/sbin/atd

root 3279 0.0 0.2 26680 2192 ? SN 19:34 0:00 /usr/bin/python

root 3281 0.0 0.0 2704 536 ? SN 19:34 0:00 /usr/libexec/ga

root 3282 0.0 0.2 19420 1660 ? Ss 19:34 0:00 /usr/bin/perl /

apache 3491 0.0 3.3 52792 26060 ? S 19:35 0:01 /usr/sbin/httpd

apache 3492 0.0 4.8 59656 37352 ? S 19:35 0:02 /usr/sbin/httpd

apache 3493 0.0 4.5 56956 34564 ? S 19:35 0:01 /usr/sbin/httpd

apache 3494 0.0 4.0 52788 31128 ? S 19:35 0:03 /usr/sbin/httpd

root 5343 0.0 0.0 3028 624 ? Ss 19:49 0:00 login -- root

root 5796 0.0 0.0 4808 604 hvc0 Ss 19:53 0:00 -bash

root 6054 0.0 0.0 0 0 ? S 19:55 0:00 [kworker/0:2]

root 6583 0.0 0.0 4320 352 hvc0 S+ 20:00 0:00 less

root 21913 0.0 0.0 0 0 ? S 21:53 0:00 [kworker/2:0]

root 22407 0.0 0.0 0 0 ? S 21:57 0:00 [kworker/3:0]

root 23117 0.0 0.0 0 0 ? S 22:02 0:00 [kworker/1:0]

root 24625 0.0 0.3 10264 2656 ? Ss 22:13 0:00 sshd: root@nott

root 24628 0.0 0.1 6692 1536 ? Ss 22:13 0:00 /usr/libexec/op

root 26703 0.0 0.3 10108 2932 ? Rs 22:28 0:00 sshd: root@pts/

root 26812 0.0 0.1 4812 1452 pts/0 Ss 22:29 0:00 -bash

root 27493 0.0 0.0 0 0 ? S 22:34 0:00 [kworker/1:2]

root 27494 0.0 0.1 4400 932 pts/0 R+ 22:34 0:00 ps aux

Memory usage stats (free)

[root@li21-298 ~]# free -m

total used free shared buffers cached

Mem: 750 497 252 0 6 77

-/+ buffers/cache: 413 336

Swap: 511 87 424

[root@li21-298 ~]# free

total used free shared buffers cached

Mem: 768004 490708 277296 0 8680 79892

-/+ buffers/cache: 402136 365868

Swap: 524284 73136 451148
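The `-/+ buffers/cache` row is what processes themselves hold: used minus buffers minus cached, i.e. 490708 - 8680 - 79892 = 402136 kB (about 393 MB), leaving 365868 kB (about 357 MB) that could be handed out without swapping at the moment this snapshot was taken. A minimal sketch of the same arithmetic read straight from /proc/meminfo, assuming the formula used by the older procps `free` shown here (newer versions also account for reclaimable slab); `app_used_kb` is a hypothetical helper name.

```shell
#!/bin/sh
# app_used_kb (hypothetical helper): read /proc/meminfo-style lines on stdin
# and print the "-/+ buffers/cache" used figure from older procps free:
# MemTotal - MemFree - Buffers - Cached, in kB.
app_used_kb() {
    awk '/^MemTotal:/ { total = $2 }
         /^MemFree:/  { free = $2 }
         /^Buffers:/  { buffers = $2 }
         /^Cached:/   { cached = $2 }   # anchored, so SwapCached is not matched
         END { print total - free - buffers - cached }'
}

# On the live box:
#   app_used_kb < /proc/meminfo
```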

List of running processes sorted by memory use

ps -eo pmem,pcpu,rss,vsize,args | sort -k 1 -r | less

%MEM %CPU RSS VSZ COMMAND

4.9 0.0 38240 59656 /usr/sbin/httpd

4.7 0.0 36324 72740 /usr/sbin/httpd

4.6 0.0 35724 56940 /usr/sbin/httpd

4.6 0.0 35512 56924 /usr/sbin/httpd

4.2 0.0 32676 56924 /usr/sbin/httpd

4.2 0.0 32312 56380 /usr/sbin/httpd

3.8 0.0 29604 52800 /usr/sbin/httpd

3.7 0.0 29024 52792 /usr/sbin/httpd

3.7 0.0 28992 52788 /usr/sbin/httpd

3.7 0.0 28664 52788 /usr/sbin/httpd

1.8 7.8 13928 127624 /usr/libexec/mysqld --basedir=/usr --datadir=/var/lib/mysql --user=mysql --log-error=/var/log/mysqld.log --pid-file=/var/run/mysqld/mysqld.pid --socket=/var/lib/mysql/mysql.sock

0.9 0.0 7260 52836 /usr/sbin/httpd

0.5 0.0 4544 4548 ntpd -u ntp:ntp -p /var/run/ntpd.pid -g

0.3 0.0 2940 10108 sshd: root@pts/0

0.3 0.0 2680 26680 /usr/bin/python -tt /usr/sbin/yum-updatesd

0.2 0.0 2208 53236 /usr/sbin/httpd

0.2 0.0 1660 19420 /usr/bin/perl /usr/libexec/webmin/miniserv.pl /etc/webmin/miniserv.conf

0.1 0.0 1460 4812 -bash

0.1 0.0 1320 27820 /usr/sbin/httpd
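Adding up the RSS column for the 13 httpd lines above gives 337860 kB, about 330 MB in total and roughly 25 MB per process on average; the ten largest children are closer to 32 MB each. RSS counts shared pages (libraries, shared memory) once per process, so treat these figures as an upper bound. A minimal sketch for getting the same numbers on the live box; `avg_rss_mb` is a hypothetical helper name, and the `ps -C httpd -o rss=` pipeline assumes procps `ps`.

```shell
#!/bin/sh
# avg_rss_mb (hypothetical helper): read RSS values in kB, one per line, on
# stdin and print the process count, the total and the average in MB.
avg_rss_mb() {
    awk '{ total += $1; n++ }
         END { if (n) printf "%d processes, %.1f MB total, %.1f MB average\n", n, total / 1024, total / n / 1024 }'
}

# On the live box, feed it the RSS of every httpd process (no header line):
#   ps -C httpd -o rss= | avg_rss_mb
```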


Type of MPM in use by Apache

[root@li21-298 ~]# httpd -V | grep 'MPM'

Server MPM: Prefork

-D APACHE_MPM_DIR="server/mpm/prefork"

Current settings in my httpd.conf file (/etc/httpd/httpd.conf)

MinSpareServers 5

MaxSpareServers 20

ServerLimit 256

MaxClients 256

MaxRequestsPerChild 4000
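With the prefork MPM every client slot is a whole httpd process, so MaxClients 256 with children of around 32 MB each (see the process list above) could ask for about 8 GB, more than ten times the 750 MB this Linode has. A common rule of thumb is to divide the memory you can spare for Apache by the size of one child. The sketch below does that arithmetic; `suggest_maxclients` is a hypothetical helper, and the 250 MB set aside for MySQL, the OS and everything else is an assumed figure for illustration, not a measurement.

```shell
#!/bin/sh
# suggest_maxclients (hypothetical helper)
# Usage: suggest_maxclients TOTAL_RAM_MB RESERVED_MB PER_CHILD_MB
# Prints how many prefork children fit in the RAM left after the reserved
# amount, rounding down so the result never overcommits memory.
suggest_maxclients() {
    total=$1 reserved=$2 per_child=$3
    if [ "$per_child" -le 0 ] || [ "$total" -le "$reserved" ]; then
        echo 0
        return 1
    fi
    echo $(( (total - reserved) / per_child ))
}

# Worst case with the current setting: 256 children x 32 MB each.
echo "MaxClients 256 could need $((256 * 32)) MB"
# Assumed budget: 750 MB RAM, 250 MB kept for MySQL/OS, 32 MB per child.
echo "Suggested MaxClients: $(suggest_maxclients 750 250 32)"
```

With these assumptions the result is 15. In prefork, ServerLimit only caps MaxClients, so it just needs to stay at least as large as whatever MaxClients you settle on.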

Current MySQL settings (/etc/my.cnf)

[mysqld]

max_allowed_packet=50M

datadir=/var/lib/mysql

socket=/var/lib/mysql/mysql.sock

user=mysql

6 Replies

Search the forum for MaxClients; it's been discussed many, many times.

# iostat

avg-cpu: %user %nice %system %iowait %steal %idle

6.11 0.00 2.29 1.00 0.75 89.84

Device: tps Blk_read/s Blk_wrtn/s Blk_read Blk_wrtn

xvda 11.79 289.42 58.40 509698 102848

xvdb 0.01 0.50 0.00 872 0

# iostat -d -x 2 5

Device: rrqm/s wrqm/s r/s w/s rsec/s wsec/s avgrq-sz avgqu-sz await svctm %util

xvda 0.65 4.58 8.64 3.02 281.50 60.80 29.36 0.18 15.87 4.58 5.34

xvdb 0.05 0.00 0.01 0.00 0.48 0.00 48.44 0.00 8.72 5.22 0.01

Device: rrqm/s wrqm/s r/s w/s rsec/s wsec/s avgrq-sz avgqu-sz await svctm %util

xvda 0.00 2.50 0.00 1.50 0.00 32.00 21.33 0.01 9.00 9.00 1.35

xvdb 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00

Device: rrqm/s wrqm/s r/s w/s rsec/s wsec/s avgrq-sz avgqu-sz await svctm %util

xvda 0.00 5.50 0.00 11.50 0.00 136.00 11.83 0.75 65.39 14.30 16.45

xvdb 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00

Device: rrqm/s wrqm/s r/s w/s rsec/s wsec/s avgrq-sz avgqu-sz await svctm %util

xvda 0.00 1.00 0.00 1.50 0.00 20.00 13.33 0.06 41.67 41.67 6.25

xvdb 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00

Device: rrqm/s wrqm/s r/s w/s rsec/s wsec/s avgrq-sz avgqu-sz await svctm %util

xvda 0.00 2.00 0.00 4.50 0.00 52.00 11.56 0.11 25.22 25.22 11.35

xvdb 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00

# mpstat

10:52:39 AM CPU %user %nice %sys %iowait %irq %soft %steal %idle intr/s

10:52:39 AM all 6.24 0.00 2.17 0.95 0.00 0.15 0.72 89.77 1230.74

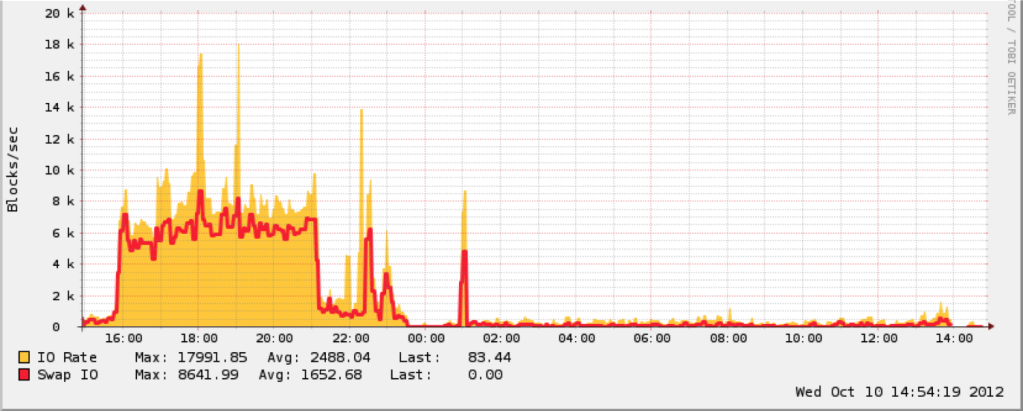

24Hr Avg I/O

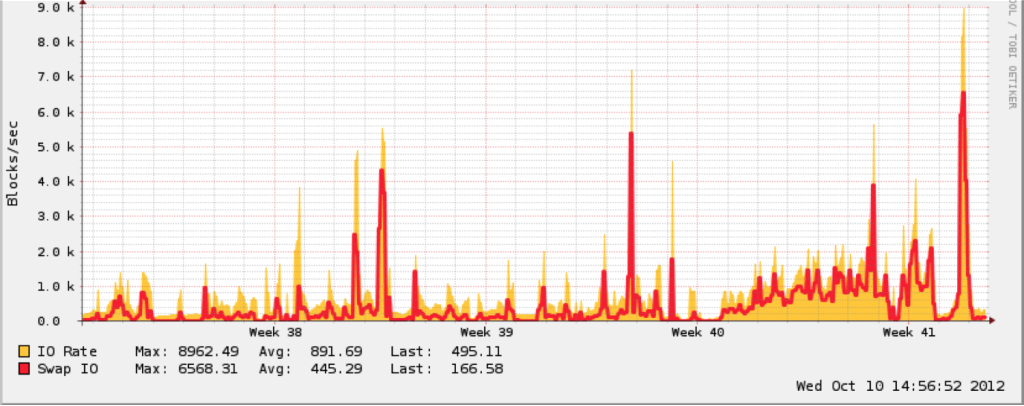

30Day Avg I/O