Streaming platforms scale to accommodate millions of concurrent viewers across diverse devices and network conditions, making efficient adaptive bitrate (ABR) streaming essential. In this blog post, we will explore the technical aspects of transitioning from client-side to server-side ABR, focusing on implementation details and performance optimizations.

Adaptive Bitrate Streaming Fundamentals

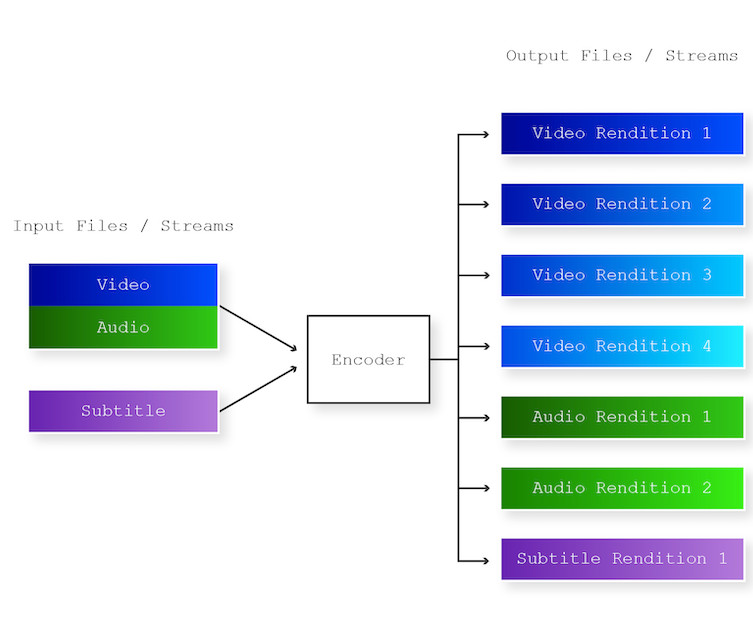

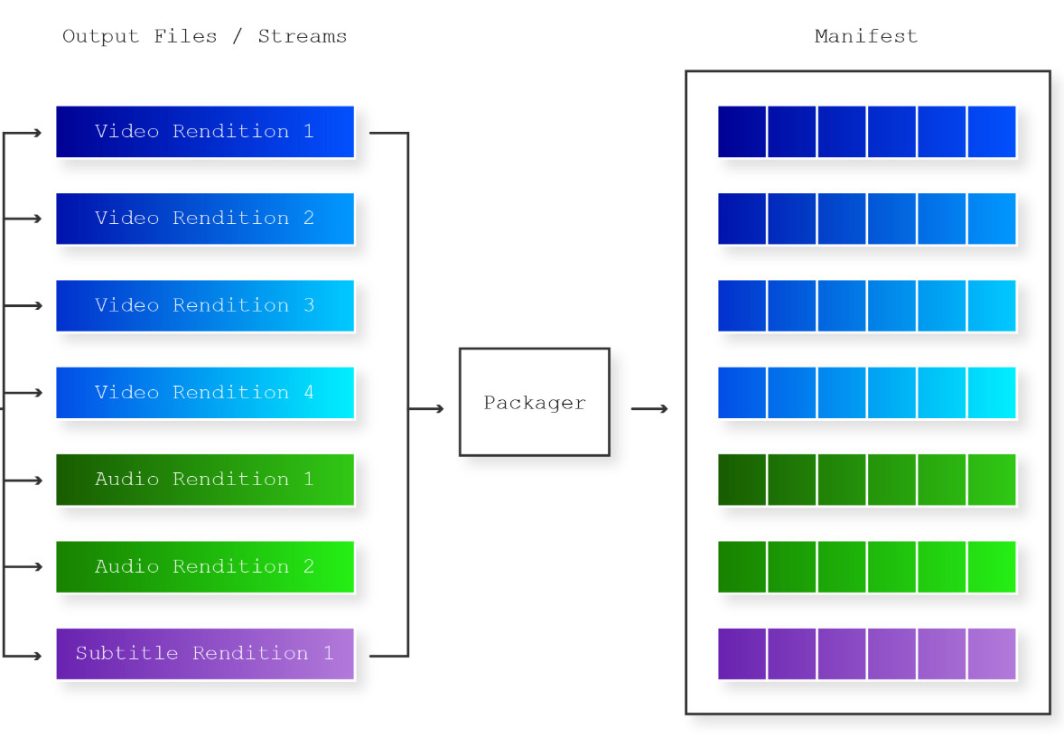

How exactly does adaptive bitrate streaming work? Let’s walk through each step. First, the video content is prepared by encoding it at multiple bitrates (e.g., 500 kbps, 1 Mbps, and 2 Mbps), splitting each of these renditions into short segments a few seconds long, and storing them.
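
To make the preparation step more concrete, here is a minimal sketch that builds one ffmpeg command per rung of a hypothetical three-rung ladder matching the example bitrates. It assumes ffmpeg with libx264 and its HLS muxer. The ladder values, the 6-second segment length, and the `Rendition`/`ffmpeg_hls_args` names are illustrative choices, not a recommended encoding profile. The code only builds the argument lists; you could pass each one to `subprocess.run` to actually encode.

```python
import shlex
from dataclasses import dataclass


@dataclass(frozen=True)
class Rendition:
    """One rung of a hypothetical encoding ladder (values are illustrative)."""
    name: str
    height: int        # output frame height in pixels
    video_kbps: int    # target average video bitrate
    audio_kbps: int = 96


# Hypothetical ladder mirroring the 500 kbps / 1 Mbps / 2 Mbps example.
LADDER = [
    Rendition("360p", 360, 500),
    Rendition("540p", 540, 1000),
    Rendition("720p", 720, 2000),
]


def ffmpeg_hls_args(src: str, r: Rendition, segment_seconds: int = 6) -> list[str]:
    """Build (but do not run) an ffmpeg command that encodes `src` into one HLS rendition."""
    return [
        "ffmpeg", "-i", src,
        "-c:v", "libx264",
        "-b:v", f"{r.video_kbps}k",
        # Cap bitrate peaks near the target so segment sizes stay predictable.
        "-maxrate", f"{round(r.video_kbps * 1.07)}k",
        "-bufsize", f"{r.video_kbps * 2}k",
        # Force a keyframe at every segment boundary so renditions can be switched cleanly.
        "-force_key_frames", f"expr:gte(t,n_forced*{segment_seconds})",
        "-vf", f"scale=-2:{r.height}",  # preserve aspect ratio with an even width
        "-c:a", "aac", "-b:a", f"{r.audio_kbps}k",
        "-f", "hls",
        "-hls_time", str(segment_seconds),
        "-hls_playlist_type", "vod",
        "-hls_segment_filename", f"{r.name}_%03d.ts",
        f"{r.name}.m3u8",
    ]


if __name__ == "__main__":
    for rung in LADDER:
        # shlex.join quotes arguments so the printed line is safe to paste into a shell.
        print(shlex.join(ffmpeg_hls_args("input.mp4", rung)))
```

Forcing keyframes at segment boundaries matters because a player (or server) can only switch renditions cleanly where every rendition starts a new, independently decodable segment at the same timestamp.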

A manifest file, such as an M3U8 playlist for HLS or an MPD (Media Presentation Description) for DASH, lists the different bitrate streams that are available and tells the player where to fetch their segments.
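
As a rough illustration of what a player reads from an HLS manifest, the sketch below parses a small, made-up master playlist using only Python’s standard library and extracts each variant’s `BANDWIDTH`, `RESOLUTION`, `CODECS`, and URI. It handles only the `#EXT-X-STREAM-INF` tag and ignores the rest of the HLS specification. A DASH MPD is XML and would need a different parser.

```python
import re
from dataclasses import dataclass
from typing import Optional

# An illustrative HLS master playlist; bitrates, codecs, and URIs are made up.
SAMPLE_MASTER = """#EXTM3U
#EXT-X-STREAM-INF:BANDWIDTH=600000,RESOLUTION=640x360,CODECS="avc1.4d401e,mp4a.40.2"
360p.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=1200000,RESOLUTION=960x540,CODECS="avc1.4d401f,mp4a.40.2"
540p.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=2300000,RESOLUTION=1280x720,CODECS="avc1.4d401f,mp4a.40.2"
720p.m3u8
"""

# Matches KEY=value or KEY="quoted value" (quoted values may contain commas).
ATTR_RE = re.compile(r'([A-Z0-9-]+)=("[^"]*"|[^,]*)')


@dataclass
class Variant:
    bandwidth: int             # peak bits per second (BANDWIDTH attribute)
    resolution: Optional[str]  # e.g. "1280x720"
    codecs: Optional[str]      # RFC 6381 codec list
    uri: str                   # media playlist for this rendition


def parse_master_playlist(text: str) -> list[Variant]:
    """Return the variant streams listed in an HLS master playlist."""
    lines = [ln.strip() for ln in text.splitlines() if ln.strip()]
    variants = []
    for i, line in enumerate(lines):
        if line.startswith("#EXT-X-STREAM-INF:") and i + 1 < len(lines):
            attr_text = line.split(":", 1)[1]
            attrs = {k: v.strip('"') for k, v in ATTR_RE.findall(attr_text)}
            # The variant's URI is on the next non-empty line.
            variants.append(Variant(
                bandwidth=int(attrs["BANDWIDTH"]),
                resolution=attrs.get("RESOLUTION"),
                codecs=attrs.get("CODECS"),
                uri=lines[i + 1],
            ))
    return variants


if __name__ == "__main__":
    for v in parse_master_playlist(SAMPLE_MASTER):
        print(v.bandwidth, v.resolution, v.uri)
```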

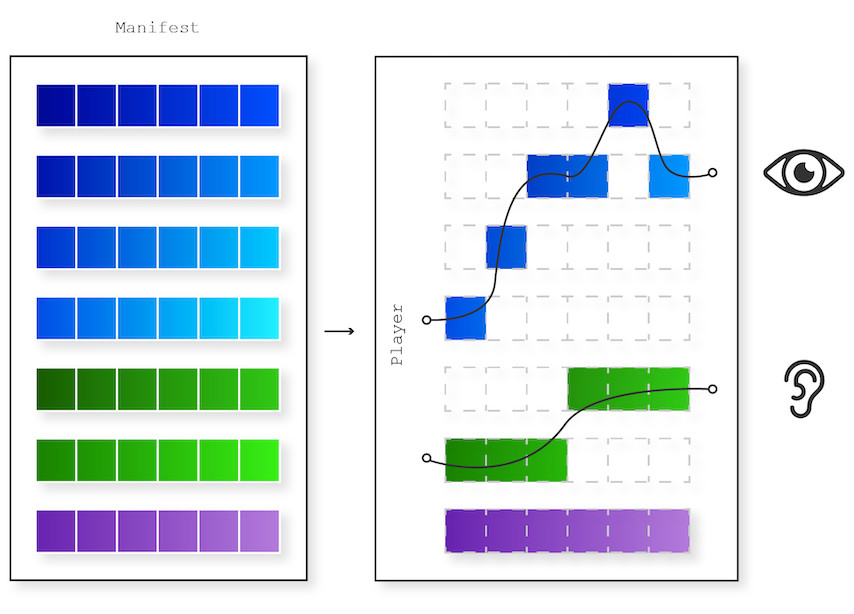

When a user starts playback, the client device downloads the manifest file to see the available bitrate options. The client selects an initial bitrate, usually starting with a lower bitrate for quicker startup.

As the video plays, the client device continuously monitors the network conditions and its internal buffer levels. If the network bandwidth becomes constrained, the client will request lower bitrate chunks to prevent rebuffering. Conversely, if the bandwidth improves, the client requests higher bitrate chunks for improved video quality. This bitrate switching happens by requesting new video segments at the updated bitrate level.
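
Client-side ABR loops like this are often built from a smoothed throughput estimate combined with buffer thresholds. The following is a minimal sketch of that idea, not any particular player’s algorithm. The ladder, the 80% safety factor, the 5 s and 20 s buffer thresholds, and the EWMA weight are all assumed values chosen for illustration.

```python
from dataclasses import dataclass
from typing import Optional

BITRATES_KBPS = [500, 1000, 2000, 4000]  # hypothetical ladder, lowest first


@dataclass
class ThroughputBufferAbr:
    """Toy client-side ABR: throughput estimate plus buffer thresholds."""
    safety: float = 0.8          # plan to use only 80% of measured throughput
    low_buffer_s: float = 5.0    # below this, fall back to the lowest rung
    high_buffer_s: float = 20.0  # up-switches only once the buffer is this full
    alpha: float = 0.3           # EWMA weight of the newest throughput sample
    estimate_kbps: Optional[float] = None
    current_kbps: int = BITRATES_KBPS[0]  # start low for a quick startup

    def on_segment_downloaded(self, size_bytes: int, seconds: float) -> None:
        """Fold one segment download into the smoothed throughput estimate."""
        sample_kbps = size_bytes * 8 / 1000 / seconds
        if self.estimate_kbps is None:
            self.estimate_kbps = sample_kbps
        else:
            self.estimate_kbps = (self.alpha * sample_kbps
                                  + (1 - self.alpha) * self.estimate_kbps)

    def next_bitrate(self, buffer_s: float) -> int:
        """Pick the bitrate for the next segment request."""
        if self.estimate_kbps is None:
            return self.current_kbps  # no measurements yet
        if buffer_s < self.low_buffer_s:
            choice = BITRATES_KBPS[0]  # protect against an imminent stall
        else:
            budget = self.estimate_kbps * self.safety
            affordable = [b for b in BITRATES_KBPS if b <= budget]
            choice = affordable[-1] if affordable else BITRATES_KBPS[0]
            # Down-switch immediately, but hold off on up-switches until the
            # buffer is comfortable, which damps oscillation.
            if choice > self.current_kbps and buffer_s < self.high_buffer_s:
                choice = self.current_kbps
        self.current_kbps = choice
        return choice


if __name__ == "__main__":
    abr = ThroughputBufferAbr()
    abr.on_segment_downloaded(size_bytes=1_000_000, seconds=2.0)  # a 4000 kbps sample
    print(abr.next_bitrate(buffer_s=25.0))
```

With a 4000 kbps estimate and a 0.8 safety factor the budget is 3200 kbps, so a healthy buffer allows the 2000 kbps rung, while a buffer under 5 s forces the lowest rung regardless of throughput.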

Challenges of Traditional Adaptive Bitrate Streaming (Client-Side ABR)

Traditionally, ABR streaming has been managed on the client side, where the device playing the video determines the best bitrate to use. However, this approach comes with some challenges. First, client devices vary widely in their processing power. An older phone might not handle the work of constantly adjusting video quality as smoothly as a brand-new, top-of-the-line model, which is one reason video quality can fluctuate when you’re watching a show on your phone.

Another issue is how much internet connections can vary, even over short periods of time. Your device has to adapt to those changes constantly, which can lead to really noticeable quality drops or frequent switching between resolutions, making for a pretty jarring viewing experience. If you live in an area with spotty internet, you might see a lot of buffering or quality dips.

There’s also the issue of latency – if there’s a delay in your device realizing your connection has changed, it might not adjust the bitrate quickly enough, leading to stalls or sudden quality drops.

Finally, all that constant adjusting and potential inefficiencies can lead to using more data than necessary. This can be a real problem for users on limited mobile data plans.

How Server-Side ABR Can Solve These Challenges

To solve these issues, server-side ABR (SSABR) moves bitrate selection from the client to the server.

Here’s how server-side adaptive bitrate streaming could work. First, the client sends an HTTP GET request for the manifest file that includes client metadata (e.g., device type, screen resolution, and supported codecs). The server parses this metadata from the request headers and combines it with its own analysis, such as current CDN load, geographic distance to the client, and historical performance for similar clients. It then generates a custom manifest file tailored to the client’s capabilities and current network conditions, listing only the bitrates it deems suitable for that client.

During playback, the server selects the optimal bitrate based on available bandwidth (estimated from round-trip time and measured throughput), content complexity (I-frame sizes, motion vectors), and the client’s buffer level (reported via real-time feedback). It delivers video segments encoded at the selected bitrate, and the client decodes and plays them. The server keeps monitoring network conditions and device capabilities, and if conditions change during playback (e.g., network congestion occurs), it can adjust the bitrate dynamically to keep playback smooth, without buffering or degradation in quality.
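
The manifest-tailoring step can be sketched as follows. Everything here is an assumption for illustration: the `X-Screen-Height`, `X-Supported-Codecs`, and `X-Downlink-Kbps` header names are hypothetical (a real service might rely on Client Hints, a device database, or its own player SDK), the ladder is made up, and CDN load and historical data are left out. The server filters its full ladder down to the renditions the device can display and decode and the reported downlink can carry, then emits an HLS master playlist containing only those.

```python
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass(frozen=True)
class Rendition:
    uri: str
    bandwidth: int  # peak bits per second
    width: int
    height: int
    codecs: str     # RFC 6381 codec string, video codec first


@dataclass(frozen=True)
class ClientProfile:
    max_height: int
    codec_prefixes: tuple[str, ...]
    downlink_bps: Optional[int]


# Hypothetical full ladder stored on the server.
FULL_LADDER = [
    Rendition("360p.m3u8", 600_000, 640, 360, "avc1.4d401e,mp4a.40.2"),
    Rendition("720p.m3u8", 2_300_000, 1280, 720, "avc1.4d401f,mp4a.40.2"),
    Rendition("1080p.m3u8", 4_500_000, 1920, 1080, "avc1.640028,mp4a.40.2"),
    Rendition("1080p_hevc.m3u8", 3_000_000, 1920, 1080, "hvc1.1.6.L120.90,mp4a.40.2"),
]


def parse_client_profile(headers: Mapping[str, str]) -> ClientProfile:
    """Read client metadata from request headers (header names are hypothetical)."""
    h = {k.lower(): v for k, v in headers.items()}  # HTTP header names are case-insensitive
    downlink_kbps = h.get("x-downlink-kbps")
    return ClientProfile(
        max_height=int(h.get("x-screen-height", "720")),
        codec_prefixes=tuple(c.strip() for c in h.get("x-supported-codecs", "avc1").split(",")),
        downlink_bps=int(downlink_kbps) * 1000 if downlink_kbps else None,
    )


def select_renditions(ladder: list[Rendition], profile: ClientProfile) -> list[Rendition]:
    """Keep renditions the device can display and decode, and the network can carry."""
    suitable = [
        r for r in ladder
        if r.height <= profile.max_height
        and r.codecs.split(",")[0].startswith(profile.codec_prefixes)
        and (profile.downlink_bps is None or r.bandwidth <= profile.downlink_bps)
    ]
    # Fall back to the cheapest rendition so playback can always start.
    return suitable or [min(ladder, key=lambda r: r.bandwidth)]


def render_master_playlist(renditions: list[Rendition]) -> str:
    """Emit an HLS master playlist listing only the chosen renditions."""
    lines = ["#EXTM3U"]
    for r in sorted(renditions, key=lambda r: r.bandwidth):
        lines.append(f'#EXT-X-STREAM-INF:BANDWIDTH={r.bandwidth},'
                     f'RESOLUTION={r.width}x{r.height},CODECS="{r.codecs}"')
        lines.append(r.uri)
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    request_headers = {"X-Screen-Height": "720", "X-Supported-Codecs": "avc1",
                       "X-Downlink-Kbps": "3000"}
    profile = parse_client_profile(request_headers)
    print(render_master_playlist(select_renditions(FULL_LADDER, profile)))
```

For the example headers (a 720-line screen, H.264 only, about 3 Mbps reported downlink), only the 360p and 720p renditions survive the filter.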

There are so many benefits to this server-side approach. Instead of each client device having to select the right bitrate on its own, the server can have a much more holistic view of network conditions, how complex the video content is, and what your device is capable of. With all that information, it can make better-informed decisions about adjusting the bitrate up or down. That means smoother transitions between quality levels and an overall more consistent streaming experience with less buffering.

Offloading the bitrate selection process to the server also reduces the processing burden on client devices. This is particularly beneficial for resource-constrained devices, improving their performance and battery life.

Another benefit of server-side adaptive bitrate streaming is that the server can continuously monitor network conditions in real time and, drawing on data from many similar sessions, potentially adjust the bitrate more accurately and quickly than client-side algorithms. So if it detects signs that your connection is about to slow down, it can proactively lower the bitrate before you even experience any hiccups. And as soon as your connection speed picks up again, it’ll ramp the quality right back up. It can even analyze how complex the video content itself is at any given moment: high-motion scenes with lots of action can get a higher bitrate for better quality, while calmer scenes can stream at lower bitrates without looking noticeably worse.
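
One way a server could combine these signals is sketched below, under several assumptions. The server is assumed to know each upcoming segment’s actual bitrate and a precomputed per-segment quality score (VMAF here) for every rendition; that data, the target score of 93, and the other thresholds are hypothetical. The code does not truly predict slowdowns. It approximates proactive behavior by tracking throughput with a fast and a slow moving average and trusting whichever is lower, so the estimate drops quickly on a slowdown but recovers cautiously. For content complexity, it picks the cheapest affordable rendition that reaches the quality target, so calm segments stay low and busy segments climb higher.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class SegmentOption:
    """One rendition of one upcoming segment, as known to the server."""
    kbps: int     # actual encoded bitrate of this segment in this rendition
    vmaf: float   # precomputed per-segment quality score (hypothetical data)


class Ewma:
    """Exponentially weighted moving average."""
    def __init__(self, alpha: float) -> None:
        self.alpha = alpha
        self.value: Optional[float] = None

    def update(self, sample: float) -> None:
        if self.value is None:
            self.value = sample
        else:
            self.value = self.alpha * sample + (1 - self.alpha) * self.value


class ServerSideSelector:
    """Per-session bitrate choice combining bandwidth, buffer, and content complexity."""
    def __init__(self, target_vmaf: float = 93.0, safety: float = 0.85,
                 min_buffer_s: float = 4.0) -> None:
        self.fast = Ewma(0.5)  # reacts within a few samples
        self.slow = Ewma(0.1)  # smooths out short spikes
        self.target_vmaf = target_vmaf
        self.safety = safety
        self.min_buffer_s = min_buffer_s

    def record_throughput(self, kbps: float) -> None:
        self.fast.update(kbps)
        self.slow.update(kbps)

    def bandwidth_estimate(self) -> Optional[float]:
        if self.fast.value is None or self.slow.value is None:
            return None
        # The minimum falls quickly when throughput drops but rises only slowly,
        # which biases the server toward lowering bitrate early.
        return min(self.fast.value, self.slow.value)

    def choose(self, options: Sequence[SegmentOption], buffer_s: float) -> SegmentOption:
        ordered = sorted(options, key=lambda o: o.kbps)
        estimate = self.bandwidth_estimate()
        if estimate is None or buffer_s < self.min_buffer_s:
            return ordered[0]  # no data yet, or the client buffer is nearly empty
        affordable = [o for o in ordered if o.kbps <= estimate * self.safety] or ordered[:1]
        # Complexity awareness: calm segments reach the quality target at a low
        # bitrate; busy segments need a higher rung to get there.
        for option in affordable:
            if option.vmaf >= self.target_vmaf:
                return option
        return affordable[-1]  # target unreachable: best quality we can afford


if __name__ == "__main__":
    selector = ServerSideSelector()
    for _ in range(5):
        selector.record_throughput(5000.0)
    calm = [SegmentOption(1000, 94.0), SegmentOption(2000, 97.0), SegmentOption(4000, 98.5)]
    busy = [SegmentOption(1000, 80.0), SegmentOption(2000, 88.0), SegmentOption(4000, 94.0)]
    print(selector.choose(calm, buffer_s=12.0).kbps)  # calm scene
    print(selector.choose(busy, buffer_s=12.0).kbps)  # high-motion scene
```

With a steady 5000 kbps estimate, the calm segment gets the 1000 kbps rendition and the busy one gets 4000 kbps. After a single 1000 kbps throughput sample, the fast average drops to 3000 kbps and the busy segment falls back to 2000 kbps.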

Lastly, server-side adaptive bitrate streaming can be very cost-effective for streaming providers. More efficient bitrate management means lower bandwidth costs across the board. Streaming providers can deliver high-quality video without incurring excessive data transfer fees, while users can enjoy their favorite content without worrying about data overages.

In a nutshell: higher-quality streams, smoother playback, better device performance, and cost savings are great reasons to start considering server-side adaptive bitrate streaming.

Performance Optimizations

To maximize the benefits of server-side ABR, there are a few performance optimizations you should consider implementing. The first is edge computing. By deploying ABR logic to edge servers, you can drastically reduce the distance that data needs to travel, which in turn minimizes latency during video playback. This proximity to end users not only results in smoother streaming with less buffering and lag, but also allows faster bitrate adjustments. Additionally, reducing the strain on central servers can improve scalability and overall network efficiency, allowing you to handle higher traffic loads more easily.
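
A back-of-the-envelope calculation shows why distance matters. This sketch assumes that signals in optical fiber travel at about 200 km per millisecond (roughly two-thirds of the speed of light in a vacuum) and ignores everything except propagation delay, so it gives only a lower bound. The two distances are hypothetical.

```python
# Light in optical fiber travels at roughly two-thirds of its vacuum speed,
# i.e. about 200 km per millisecond.
FIBER_KM_PER_MS = 200.0


def min_rtt_ms(one_way_km: float) -> float:
    """Propagation-only lower bound on round-trip time over fiber.

    Real RTTs are higher: routes are not straight lines, and queuing,
    routing, and server processing all add delay.
    """
    return 2 * one_way_km / FIBER_KM_PER_MS


if __name__ == "__main__":
    # Hypothetical distances: a distant central origin vs. a nearby edge node.
    for label, km in [("central origin", 4000), ("edge node", 50)]:
        print(f"{label}: at least {min_rtt_ms(km):.1f} ms per round trip")
```

For these distances, the floor drops from 40 ms to 0.5 ms per round trip. Every exchange of client feedback and bitrate decision pays that cost at least once.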

Another important performance optimization involves implementing effective caching strategies. By intelligently caching popular video segments at various bitrates, you can significantly reduce the load on your origin server and improve streaming performance. Probabilistic data structures help here. A Bloom filter can cheaply record which segments have already been requested, so the cache admits a segment only once it has been requested at least twice instead of filling up with one-off requests. A counting structure such as a Count-Min sketch can estimate how often each segment is requested. Together, these let the system keep frequently accessed content close to users, reducing latency and minimizing repeated fetches from the origin server. This approach also helps optimize bandwidth usage, leading to a more scalable and efficient streaming architecture.
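
Here is a minimal sketch of the “cache on second hit” idea. A Bloom filter records every segment key the edge has seen, and an LRU cache admits a segment only when the filter says it was requested before. The filter size, hash count, cache capacity, and the `fetch_from_origin` callback are assumptions for illustration. Because a Bloom filter can return false positives but never false negatives, an occasional one-off segment may be cached, but a segment requested twice is never missed. A production filter would also need periodic resetting or rotation, since this one never clears bits.

```python
import hashlib
from collections import OrderedDict
from typing import Callable


class BloomFilter:
    """Fixed-size Bloom filter: set membership with false positives, no false negatives."""

    def __init__(self, size_bits: int = 1 << 20, num_hashes: int = 4) -> None:
        # The default size is a power of two, so the odd stride below yields distinct positions.
        self.size = size_bits
        self.k = num_hashes
        self.bits = bytearray(size_bits // 8)

    def _positions(self, key: str) -> list[int]:
        # Double hashing: derive k bit positions from two 64-bit values of one digest.
        digest = hashlib.sha256(key.encode()).digest()
        h1 = int.from_bytes(digest[:8], "big")
        h2 = int.from_bytes(digest[8:16], "big") | 1
        return [(h1 + i * h2) % self.size for i in range(self.k)]

    def add(self, key: str) -> None:
        for p in self._positions(key):
            self.bits[p // 8] |= 1 << (p % 8)

    def __contains__(self, key: str) -> bool:
        return all(self.bits[p // 8] & (1 << (p % 8)) for p in self._positions(key))


class SecondHitCache:
    """LRU segment cache that admits a segment only on its second request."""

    def __init__(self, capacity: int, fetch_from_origin: Callable[[str], bytes]) -> None:
        self.capacity = capacity
        self.fetch_from_origin = fetch_from_origin  # hypothetical origin callback
        self.seen = BloomFilter()
        self.store: "OrderedDict[str, bytes]" = OrderedDict()

    def get(self, key: str) -> bytes:
        if key in self.store:
            self.store.move_to_end(key)  # mark as most recently used
            return self.store[key]
        data = self.fetch_from_origin(key)
        if key in self.seen:
            # Requested before (or a rare false positive): worth caching.
            self.store[key] = data
            if len(self.store) > self.capacity:
                self.store.popitem(last=False)  # evict the least recently used
        else:
            self.seen.add(key)  # first sighting: remember it, but do not cache yet
        return data


if __name__ == "__main__":
    def fake_origin(key: str) -> bytes:
        return f"bytes of {key}".encode()

    cache = SecondHitCache(capacity=100, fetch_from_origin=fake_origin)
    for key in ["720p/seg_001.ts", "720p/seg_001.ts", "1080p/seg_042.ts"]:
        cache.get(key)
    print(list(cache.store))
```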

Benchmarking and Monitoring Your Streaming Experience

After you migrate to server-side adaptive bitrate delivery, you’ll want to quantify the improvements through key performance indicators (KPIs) that measure both user experience and system efficiency. Five KPIs are especially useful.

The first is startup time, which measures the time from a user’s play request to the first frame rendered. A short startup time is vital for a seamless experience, since long delays lead to user frustration and increased churn.

Another important KPI is the rebuffer ratio, calculated by dividing the total rebuffer time by the total playtime, then multiplying by 100. This ratio reflects the percentage of time a video spends buffering during playback, and minimizing it keeps viewing uninterrupted.

Bitrate switching frequency, which tracks the number of quality switches per minute, is another useful metric. Excessive switching disrupts the viewing experience, so ABR logic has to balance responsiveness against stability.

Average bitrate measures the mean bitrate delivered over a session and gives insight into the overall video quality users experience. Higher bitrates generally mean better quality, but they must stay within the available bandwidth to avoid rebuffering.

Lastly, VMAF (Video Multi-Method Assessment Fusion) scores are a perceptual quality metric, typically reported on a 0 to 100 scale, computed by comparing the encoded video against its source. They let you check whether the quality viewers actually see matches the bitrate decisions made by the ABR algorithm. High VMAF scores relative to the bitrate indicate that the algorithm is delivering good visual quality efficiently.
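
Most of these KPIs can be computed from a per-session playback log. The sketch below assumes a hypothetical log schema in which a session is a time-ordered list of intervals, each either “playing” at some bitrate or “rebuffering”. It computes the average bitrate as a time-weighted mean. VMAF is left out because it requires the source video and a dedicated tool such as Netflix’s libvmaf.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Interval:
    """A stretch of the session in one state (hypothetical log schema)."""
    start_s: float
    end_s: float
    state: str              # "playing" or "rebuffering"
    bitrate_kbps: int = 0   # rendition bitrate while playing

    @property
    def duration(self) -> float:
        return self.end_s - self.start_s


def session_kpis(play_requested_s: float, first_frame_s: float,
                 intervals: Sequence[Interval]) -> dict[str, float]:
    """Compute startup time, rebuffer ratio, switch rate, and average bitrate for one session."""
    playing = [i for i in intervals if i.state == "playing"]
    play_time = sum(i.duration for i in playing)
    rebuffer_time = sum(i.duration for i in intervals if i.state == "rebuffering")
    if play_time <= 0:
        raise ValueError("session has no playback time")
    # A switch is any change of bitrate between consecutive playing intervals.
    switches = sum(1 for a, b in zip(playing, playing[1:]) if a.bitrate_kbps != b.bitrate_kbps)
    return {
        "startup_time_s": first_frame_s - play_requested_s,
        "rebuffer_ratio_pct": 100 * rebuffer_time / play_time,
        "switches_per_min": switches / (play_time / 60),
        # Time-weighted, so a long stretch at one bitrate counts for more.
        "avg_bitrate_kbps": sum(i.bitrate_kbps * i.duration for i in playing) / play_time,
    }


if __name__ == "__main__":
    log = [
        Interval(1.5, 61.5, "playing", 2000),
        Interval(61.5, 64.5, "rebuffering"),
        Interval(64.5, 124.5, "playing", 1000),
    ]
    print(session_kpis(play_requested_s=0.0, first_frame_s=1.5, intervals=log))
```

For this made-up session, the sketch reports a 1.5 s startup time, a 2.5% rebuffer ratio, 0.5 switches per minute, and an average bitrate of 1500 kbps.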

Startup time, rebuffer ratio, bitrate switching frequency, average bitrate, and VMAF scores are all KPIs that you can use to fine-tune ABR algorithms and optimize user satisfaction.

Conclusion

Server-side adaptive bitrate streaming addresses many of the limitations of traditional client-side ABR, offering a more efficient and consistent streaming experience. By centralizing the decision-making process, server-side ABR improves performance, enhances quality control, and optimizes data usage.

In this blog post, we explored the challenges of client-side ABR and the compelling advantages of server-side solutions. If you are a developer at a streaming company and want to learn more about optimizing your adaptive bitrate streaming solutions, you can use this link to apply for up to $5,000 in Linode credits.
