If you’re a developer at a financial institution or bank, you know that speed and reliability aren’t just nice-to-haves; they’re essential. In the financial world, milliseconds can mean the difference between profit and loss. Whether you’re executing trades, processing transactions, or delivering real-time analytics, every millisecond counts. Faster processing translates directly into better user experiences, more transactions per second, and ultimately, more revenue.

One of our customers needed to process hundreds of millions of data keys while meeting strict performance and uptime SLAs. In this blog post, we’ll look at how Akamai helped them ingest high volumes of data with low latency, and why it might be the right choice for you too.

Volume: Handling Massive Amounts of Data

The first V in big data, volume, refers to the sheer amount of data generated and collected. For a financial institution like a bank, handling this volume effectively is crucial because of the constant stream of transactions, account updates, customer interactions, and other financial activities.

Akamai continuously monitors how traffic is flowing to and from your data centers, detecting internet congestion, outages, or other issues that might affect your customers. This made a big difference for our customer, who needed to send users to the closest data center, or to the best-performing one, to keep latency low. They set up custom rules based on real-time data to route traffic for the highest possible performance.
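To make the “closest or best-performing data center” idea concrete, here is a minimal sketch, assuming the traffic manager already has a health status and a measured round-trip time for each data center from the user’s region. The `DataCenter` type, the `choose_data_center` function, the `max_rtt_ms` rule, and all the numbers are hypothetical illustrations, not Akamai’s API or its actual selection algorithm.

```python
from dataclasses import dataclass


@dataclass
class DataCenter:
    name: str
    healthy: bool
    rtt_ms: float  # hypothetical measured round-trip time from the user's region


def choose_data_center(candidates, max_rtt_ms=None):
    """Return the healthy data center with the lowest measured RTT.

    `max_rtt_ms` stands in for a custom rule: data centers slower than the
    threshold are skipped. Returns None if nothing qualifies.
    """
    eligible = [
        dc for dc in candidates
        if dc.healthy and (max_rtt_ms is None or dc.rtt_ms <= max_rtt_ms)
    ]
    if not eligible:
        return None
    return min(eligible, key=lambda dc: dc.rtt_ms)


# Hypothetical measurements for one user region.
measurements = [
    DataCenter("dc-east", healthy=False, rtt_ms=12.0),  # closest, but down
    DataCenter("dc-central", healthy=True, rtt_ms=28.0),
    DataCenter("dc-west", healthy=True, rtt_ms=65.0),
]

best = choose_data_center(measurements, max_rtt_ms=50.0)
print(best.name)  # dc-central
```

Because the closest data center is down and the farthest one breaks the latency rule, the request falls through to the next-fastest healthy option.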

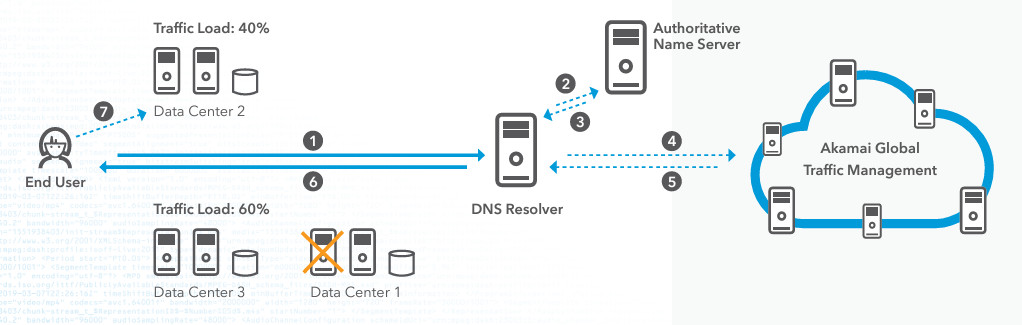

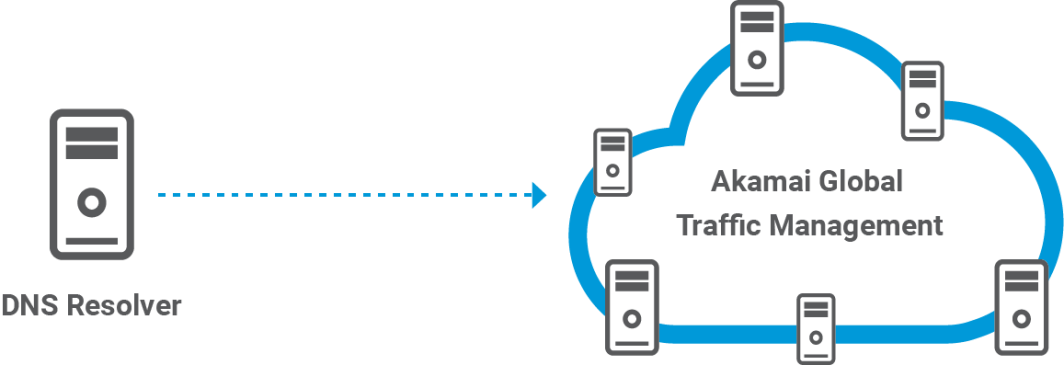

Let’s take a look at an example workflow that demonstrates how Akamai’s Global Traffic Management (GTM) handles massive volume. Akamai GTM distributes incoming traffic among multiple data centers.

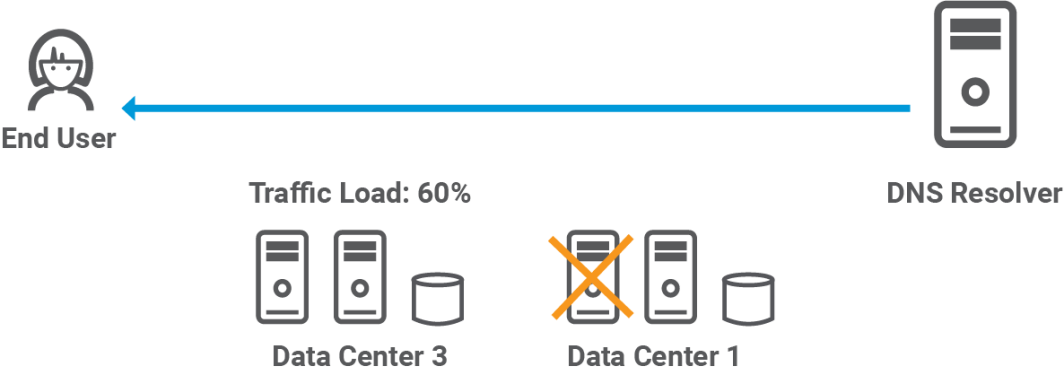

In this example, Data Center 1 is down because of a power outage. Data Center 2, on the top, handles 40% of the traffic load, while Data Center 3, on the bottom, handles the remaining 60%. This distribution ensures that no single data center becomes a bottleneck, maintaining high performance and availability. GTM also directs traffic based on current load conditions, sending more of it to Data Center 3 while Data Center 1 is down.
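The post doesn’t describe GTM’s internal selection mechanics, but the behavior in this example can be sketched with ordinary weighted random selection: configured weights are renormalized over the data centers that are still up. The weights below are hypothetical, chosen so that the surviving data centers split traffic 40/60 as in the diagram, and the function names are illustrative only.

```python
import random


def effective_shares(weights, down):
    """Renormalize configured weights over the data centers that are up."""
    live = {dc: w for dc, w in weights.items() if dc not in down and w > 0}
    total = sum(live.values())
    if total == 0:
        raise RuntimeError("no data center available")
    return {dc: w / total for dc, w in live.items()}


def pick_data_center(shares, rng):
    """Choose one data center at random, in proportion to its share."""
    names = list(shares)
    return rng.choices(names, weights=[shares[n] for n in names], k=1)[0]


# Hypothetical configured weights; with dc1 out, dc2 and dc3 split 40/60.
weights = {"dc1": 50, "dc2": 20, "dc3": 30}
shares = effective_shares(weights, down={"dc1"})
print(shares)  # {'dc2': 0.4, 'dc3': 0.6}

rng = random.Random(42)  # seeded so the simulation is repeatable
counts = {"dc2": 0, "dc3": 0}
for _ in range(10_000):
    counts[pick_data_center(shares, rng)] += 1
print(counts)  # roughly 4,000 for dc2 and 6,000 for dc3
```

Renormalizing instead of hard-coding the 40/60 split means the same configuration keeps working when a different data center goes down.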

Let’s walk through this example step by step. First, the end user will send a request to access the bank’s mobile application or website.

Then, the DNS resolver, using standard DNS procedures, requests the IP address from the site’s name server.

Here’s where Akamai comes into play. Instead of getting an IP address directly, the resolver gets a CNAME alias that points to a hostname answered by Akamai’s name servers.

When the resolver looks up that alias, Akamai’s name server returns the best route to the bank for this user.

To choose that route, GTM evaluates the custom rules you’ve set up, checks its global network of sensors, and returns a list of IP addresses for the optimal data center.

This could be an Akamai data center, a cloud provider, or even one of your own data centers. The resolver then passes this optimized IP address back to the user’s browser.
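The lookup sequence above can be simulated in a few lines. This is a toy model, not a real DNS resolver: the hostnames use the reserved `example` domains, the IP addresses come from the documentation ranges, and `traffic_manager_answer` is a hypothetical stand-in for GTM’s routing decision.

```python
# Hypothetical zone data: the bank's hostname is a CNAME to a name that a
# traffic manager answers dynamically. None of these names are real.
STATIC_RECORDS = {
    "www.bank.example": ("CNAME", "www.bank.example.gtm.example.net"),
}

# Addresses the traffic manager can hand out, per data center.
DATA_CENTER_IPS = {
    "dc2": ["192.0.2.10", "192.0.2.11"],
    "dc3": ["198.51.100.20"],
}


def traffic_manager_answer(client_region):
    """Stand-in for the traffic manager: pick a data center for this client."""
    # Hypothetical rule: western clients go to dc2, everyone else to dc3.
    dc = "dc2" if client_region == "us-west" else "dc3"
    return DATA_CENTER_IPS[dc]


def resolve(hostname, client_region, max_hops=8):
    """Follow CNAME records until the traffic manager produces addresses."""
    name = hostname
    for _ in range(max_hops):
        record = STATIC_RECORDS.get(name)
        if record is not None and record[0] == "CNAME":
            name = record[1]  # restart the lookup at the alias target
            continue
        if name.endswith(".gtm.example.net"):
            return name, traffic_manager_answer(client_region)
        raise LookupError(f"no record for {name}")
    raise LookupError("CNAME chain too long")


print(resolve("www.bank.example", "us-west"))
# ('www.bank.example.gtm.example.net', ['192.0.2.10', '192.0.2.11'])
```

The key point the simulation captures is that the bank’s own zone never has to change when routing decisions change; only the answers for the alias target do.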

Lastly, the user connects to your website, and they probably have no idea about the complex dance that just happened behind the scenes because it all happened in milliseconds. Not only does this take a lot of the headache out of managing global traffic, but it ensures high availability. Plus, with the ability to set up custom rules, you have the flexibility to optimize for whatever metrics matter most to your bank.

This dynamic load balancing helps prevent any single data center from being overloaded, ensuring continuous service even during peak times. The example also shows failover in action: because Data Center 1 is down, Akamai’s GTM automatically redirects traffic to the available data centers (Data Center 2 and Data Center 3) without any manual intervention. This failover capability is crucial for banks, keeping their services accessible even if one or more data centers experience issues.
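Automatic failover depends on health checks deciding when a data center is “down.” A common approach is hysteresis: mark a data center down only after several consecutive failed probes, and back up only after several consecutive successes. The sketch below assumes that approach; the `HealthTracker` class and its thresholds are hypothetical and don’t reflect how GTM’s own liveness tests are configured.

```python
from dataclasses import dataclass


@dataclass
class HealthTracker:
    """Marks a data center down or up based on consecutive probe results."""
    fail_threshold: int = 3      # hypothetical: failures needed to mark down
    recover_threshold: int = 2   # hypothetical: successes needed to mark up
    up: bool = True
    _fails: int = 0
    _successes: int = 0

    def record(self, probe_ok: bool) -> bool:
        """Record one probe result and return the (possibly updated) state."""
        if probe_ok:
            self._fails = 0
            self._successes += 1
            if not self.up and self._successes >= self.recover_threshold:
                self.up = True
        else:
            self._successes = 0
            self._fails += 1
            if self.up and self._fails >= self.fail_threshold:
                self.up = False
        return self.up


dc1 = HealthTracker()
history = [dc1.record(ok) for ok in [True, False, False, False, True, True]]
print(history)  # [True, True, True, False, False, True]
```

Requiring several consecutive results before changing state keeps a single lost probe from bouncing traffic between data centers.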

Velocity: Speed of Data Processing

The second V in big data is velocity: the speed at which data is generated, processed, and analyzed. By strategically placing computing resources closer to end users and data sources, Akamai drastically reduces the time it takes for data to traverse the network. This approach brings computation and data storage closer to the point of need, resulting in substantially faster data processing.

For this financial institution, when a customer initiated a fund transfer through the mobile app, the request traditionally had to travel to a central data center, possibly located thousands of miles away. With Akamai’s edge computing, the initial processing of this request can happen at a nearby edge server, which reduced transaction processing time from seconds to milliseconds.
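A back-of-the-envelope calculation shows why distance matters so much. Light in optical fiber travels at roughly two-thirds of its speed in a vacuum, about 200 km per millisecond, which sets a physical floor on round-trip time. The 4,000 km and 50 km distances below are illustrative assumptions, and the result is only the propagation floor, not a model of this customer’s measured times.

```python
# Light in fiber covers roughly 200 km per millisecond. This is a lower bound;
# real paths add routing detours, queuing, and server processing time.
FIBER_KM_PER_MS = 200.0


def min_rtt_ms(distance_km: float) -> float:
    """Lower bound on round-trip propagation delay over a straight fiber path."""
    return 2 * distance_km / FIBER_KM_PER_MS


def setup_cost_ms(distance_km: float, round_trips: int) -> float:
    """Propagation delay for a request that needs `round_trips` round trips,
    e.g. TCP handshake + TLS 1.3 handshake + HTTP request/response = 3."""
    return round_trips * min_rtt_ms(distance_km)


for label, km in [("central data center", 4000), ("nearby edge server", 50)]:
    print(f"{label}: {setup_cost_ms(km, round_trips=3):.1f} ms")
# central data center: 120.0 ms
# nearby edge server: 1.5 ms
```

No amount of server tuning removes that floor; moving the first hop closer to the user is the only way to shrink it.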

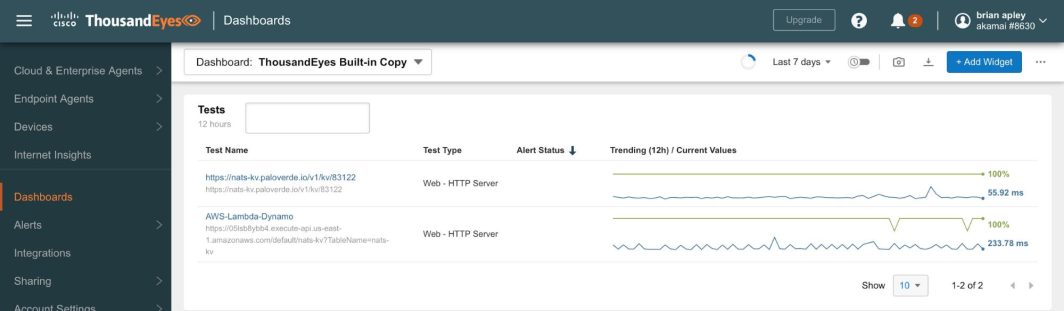

Let’s compare performance using Akamai vs. AWS. For this comparison, we used the ThousandEyes monitoring service and its 11 US-based test agents. As the control, our team configured a test that requested an object via HTTPS from AWS API Gateway, which fronted a Lambda function that returned a key-value (KV) object from DynamoDB hosted in US-East-1. The experiment requested a similar-sized object via HTTPS from a NATS.io cluster delivered via Akamai compute.

Now, let’s compare the response times from AWS and Akamai, using the dashboard above to analyze a few key performance metrics. The total download time (7-day average) was 55 ms for Akamai’s NATS.io object and 233 ms for AWS’s DynamoDB object. That is a 76% reduction in total download time with Akamai, highlighting its superior velocity in data processing.

| Metric | Akamai NATS.io Object | AWS DynamoDB Object |
| --- | --- | --- |
| Total Download Time | 55 ms (DNS resolution ~3 ms; TCP connect ~7 ms; TLS handshake ~15 ms; Time to First Byte (TTFB) ~20 ms; content download ~10 ms) | 233 ms (DNS resolution ~10 ms; TCP connect ~25 ms; TLS handshake ~40 ms; TTFB ~120 ms; content download ~38 ms) |
| Wait Times | 5-8 ms | 30-50 ms |
| Throughput | ~100 Mbps | ~40 Mbps |
| Latency (Round Trip Time) | ~15 ms | ~60 ms |
| Time to Interactive (TTI) | ~70 ms | ~280 ms |
| Cache Hit Ratio | 98.5% | 92% (CloudFront) |
| Global Server Load Balancing (GSLB) Efficiency | 99.99% | 99.95% |
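The per-phase breakdowns in the table add up to the reported totals. The quick check below uses only the numbers from the table to reproduce the 55 ms and 233 ms totals and the 76% reduction, and to show which phase accounts for most of the gap. Since the phase values are approximate, treat this as a consistency check rather than a new measurement.

```python
# Per-phase timings (ms) from the table, in connection order.
akamai = {"dns": 3, "tcp_connect": 7, "tls_handshake": 15, "ttfb": 20, "download": 10}
aws = {"dns": 10, "tcp_connect": 25, "tls_handshake": 40, "ttfb": 120, "download": 38}


def percent_reduction(baseline: float, improved: float) -> float:
    """How much smaller `improved` is than `baseline`, as a percentage."""
    return (baseline - improved) / baseline * 100


akamai_total = sum(akamai.values())
aws_total = sum(aws.values())
print(akamai_total, aws_total)  # 55 233
print(f"{percent_reduction(aws_total, akamai_total):.1f}%")  # 76.4%

# Which phase contributes most to the difference?
gap = {phase: aws[phase] - akamai[phase] for phase in akamai}
print(max(gap, key=gap.get))  # ttfb
```

Time to first byte alone accounts for 100 ms of the 178 ms difference, which is consistent with the request being served close to the user rather than from a distant region.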

From the above metrics, we can conclude that Akamai’s architecture provides a more robust foundation for meeting the stringent SLAs of financial institutions. The significantly lower TTI (70 ms vs. 280 ms) makes for a more responsive user experience, which is critical for financial applications. For this customer, Akamai’s architecture both sped things up and provided the reliability needed to meet their strict SLAs.

Variety: Managing Data Types

The last V in big data stands for variety. Variety refers to the different types of data that organizations must handle. If you work at a financial institution or a bank, you probably need to juggle a multitude of data types and sources every day. You have the structured data, like the transaction records, account balances, and customer information. Then you have the real-time streaming data, like stock market feeds and online payment transactions, that change constantly.

Akamai’s Global Traffic Management plays a crucial role in managing these different data types. For high-priority transaction data, like fund transfers, GTM continuously monitors network conditions to route these requests to your fastest-responding data centers. For static content that doesn’t change often, such as account statements or information about your financial products, GTM directs requests to edge servers closer to the user. This takes load off your central systems and speeds up access times.
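The split described above can be expressed as a simple routing policy. Because GTM answers DNS queries, it sees the hostname a client is resolving rather than the URL path, so this kind of split usually means giving each type of traffic its own hostname. The hostnames and the `route` function below are hypothetical.

```python
from enum import Enum


class Target(Enum):
    FASTEST_ORIGIN = "fastest-responding data center"
    EDGE = "nearby edge server"


# Hypothetical hostnames, one per kind of traffic.
TRANSACTION_HOSTS = {"api.bank.example", "transfers.bank.example"}
EDGE_HOSTS = {"static.bank.example", "statements.bank.example"}


def route(hostname: str) -> Target:
    """Map a hostname to the kind of destination its traffic should use."""
    if hostname in TRANSACTION_HOSTS:
        # High-priority, always-fresh data: send to the fastest origin.
        return Target.FASTEST_ORIGIN
    if hostname in EDGE_HOSTS:
        # Rarely changing content: serve from an edge server near the user.
        return Target.EDGE
    raise KeyError(f"no routing policy for {hostname}")


print(route("transfers.bank.example").value)  # fastest-responding data center
print(route("static.bank.example").value)     # nearby edge server
```

Raising an error for unknown hostnames, rather than silently picking a default, makes a missing policy visible during testing.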

GTM combines all of this data – network conditions, server health, your custom rules, and current traffic patterns – and uses it to make split-second decisions on how to route each incoming request. It’s constantly optimizing and re-optimizing these routes, ensuring that each type of data – whether it’s a simple balance check or a complex international wire transfer – gets handled in the most efficient way possible. This level of intelligent routing means your bank can maintain high performance and reliability, even as the volume and complexity of your digital transactions continue to grow.
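GTM’s actual decision logic isn’t described here, but one simple way to combine the signals named above is to filter on health and custom rules, then rank the remaining data centers by a score. The sketch below assumes a score of latency plus a load penalty; the `Candidate` fields, `load_penalty_ms`, and all values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    healthy: bool         # server health from liveness checks
    rtt_ms: float         # current network conditions toward the client
    load: float           # current traffic as a fraction of capacity (0.0 to 1.0)
    allowed: bool = True  # outcome of custom rules (e.g., data residency)


def score(c: Candidate, load_penalty_ms: float = 100.0) -> float:
    """Lower is better: latency plus a penalty that grows with load."""
    return c.rtt_ms + load_penalty_ms * c.load


def decide(candidates):
    """Pick the best healthy, rule-compliant data center."""
    eligible = [c for c in candidates if c.healthy and c.allowed]
    if not eligible:
        raise RuntimeError("no eligible data center")
    return min(eligible, key=score).name


candidates = [
    Candidate("dc1", healthy=False, rtt_ms=10, load=0.1),
    Candidate("dc2", healthy=True, rtt_ms=20, load=0.9),
    Candidate("dc3", healthy=True, rtt_ms=35, load=0.3),
]
print(decide(candidates))  # dc3: dc2 is closer but heavily loaded

candidates[1].load = 0.1  # dc2's load drops, so the next decision changes
print(decide(candidates))  # dc2
```

Re-running the same decision as measurements change is what “optimizing and re-optimizing” amounts to: the policy stays fixed while its inputs move.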

To Sum Up

When milliseconds matter and data complexity is high, Akamai’s edge-focused solutions provide the speed, reliability, and efficiency required to keep your business at the forefront. With advanced GTM and intelligent caching, Akamai handles massive volumes of data quickly by distributing the load across its extensive network.

Engineers at financial institutions can transform their infrastructure with Akamai. By leveraging Akamai’s extensive global network, you can achieve unparalleled speed and reliability, keeping your users satisfied and your operations running seamlessly.

If you’re curious about how Akamai’s cutting-edge technology can help your applications run smoothly and efficiently, you can apply for up to $5,000 in credits to help meet your strict performance SLAs and provide a great experience for your customers.
